Computer graphics is concerned with all aspects of producing pictures or images using a computer.

It encompasses the creation, manipulation, and representation of images and animations on computers.

Computer graphics can be broadly classified into two types: two-dimensional (2D) and three-dimensional (3D) graphics.

2D Graphics: digital images that are generated on a computer.

They include 2D geometric models, image compositions, pixel art, digital art, photographs, and text.

2D computer-generated images are used every day in applications that grew out of traditional printing and drawing.

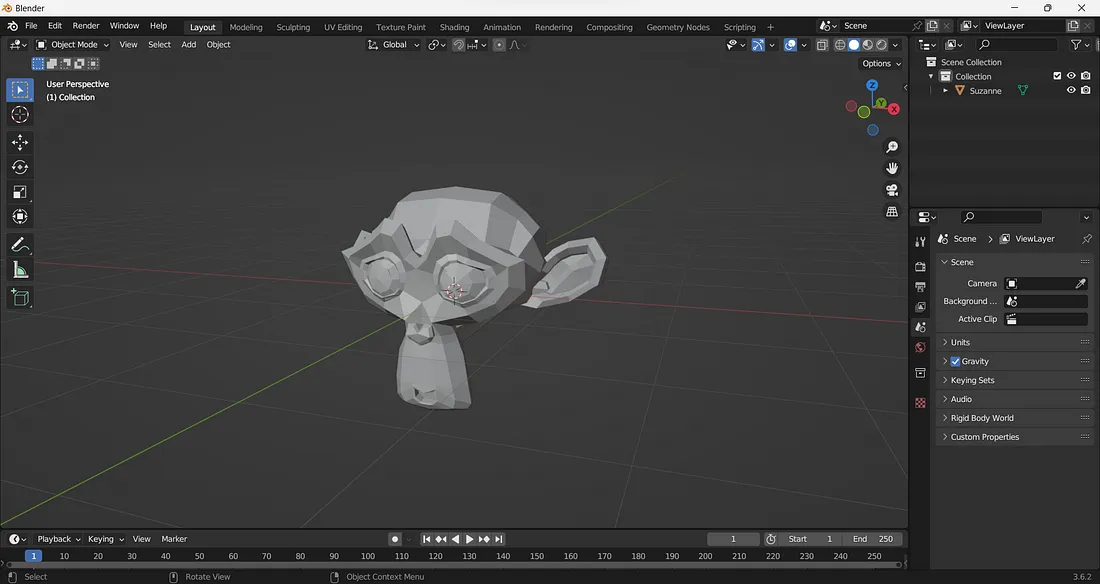

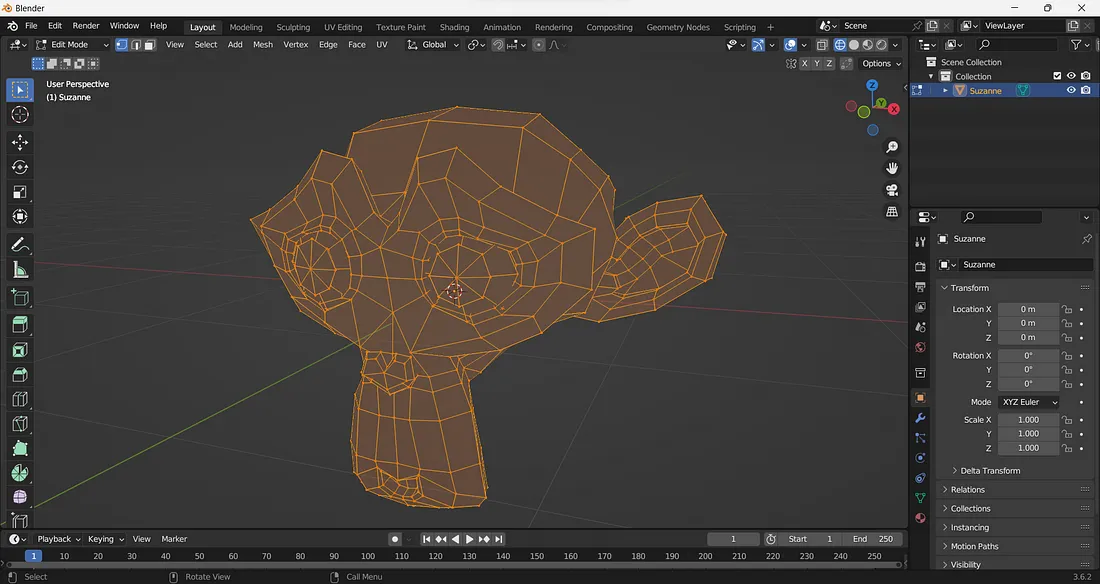

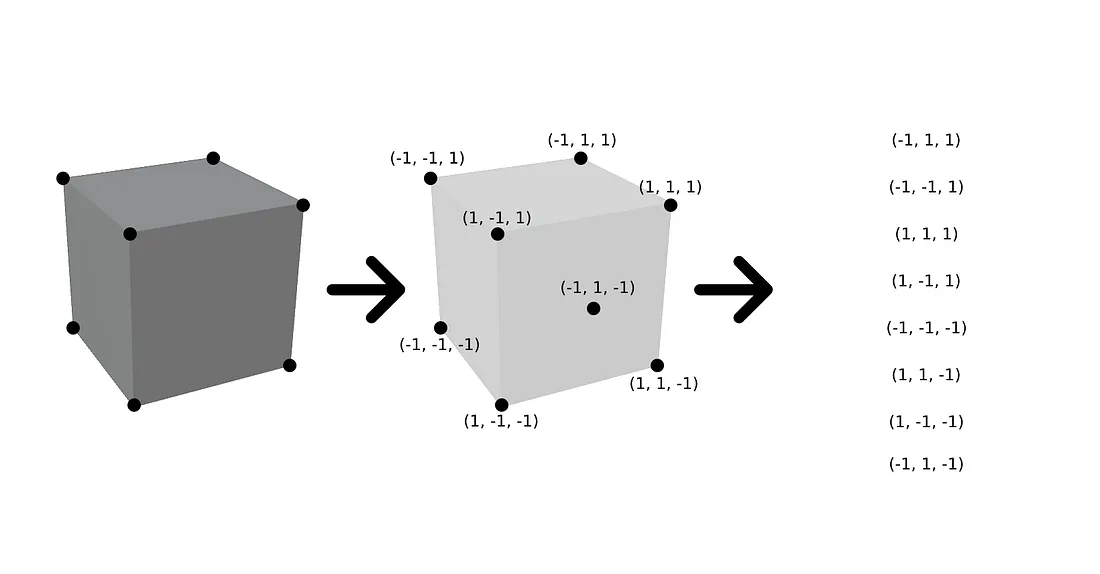

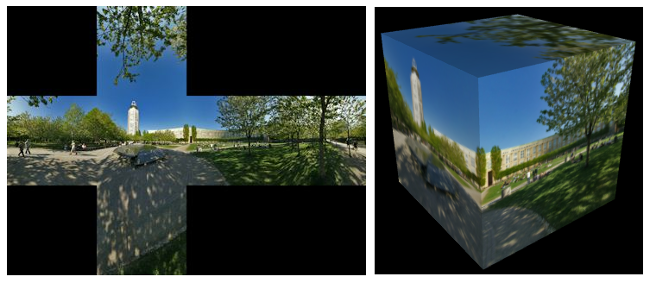

3D Graphics: graphics that use a three-dimensional representation of geometric data.

This geometric data is then manipulated by computers via 3D computer graphics software in order to customize its display, movement, and appearance.

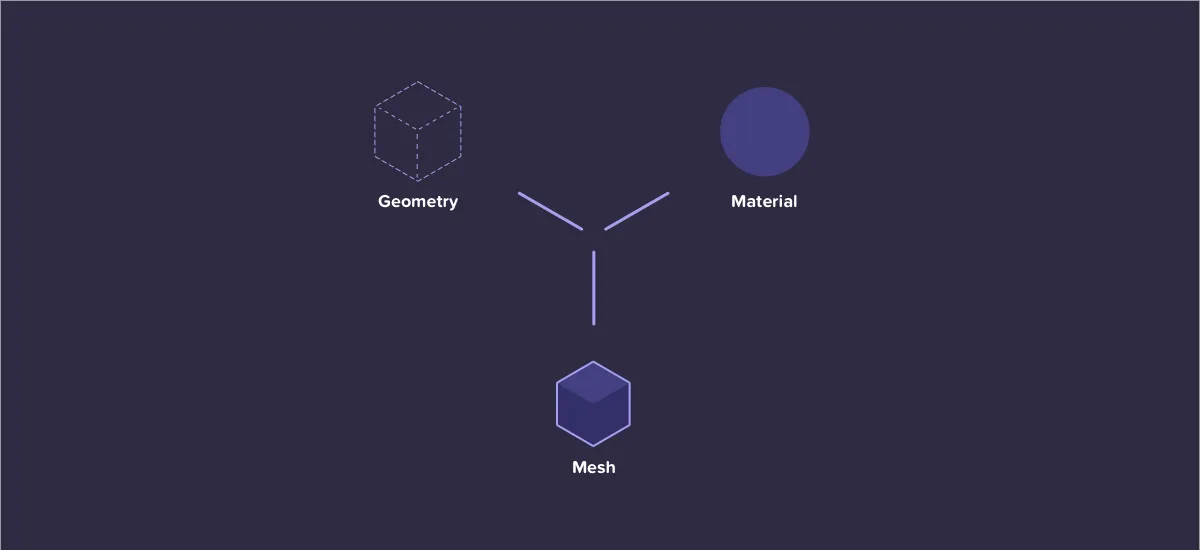

3D computer graphics are often referred to as 3D models. A 3D model is a mathematical representation of geometric data that is contained in a data file. 3D models can be used for real-time 3D viewing in animations, videos, movies, training, simulations, and architectural visualizations, or for display as 2D rendered images (2D renders).

The development of computer graphics has been driven both by the needs of the user community and by advances in hardware and software. The applications of computer graphics are many and varied; we can, however, divide them into four major areas: display of information, design, simulation and animation, and user interfaces.

Although many applications span two or more of these areas, the development of the field was based on separate work in each.

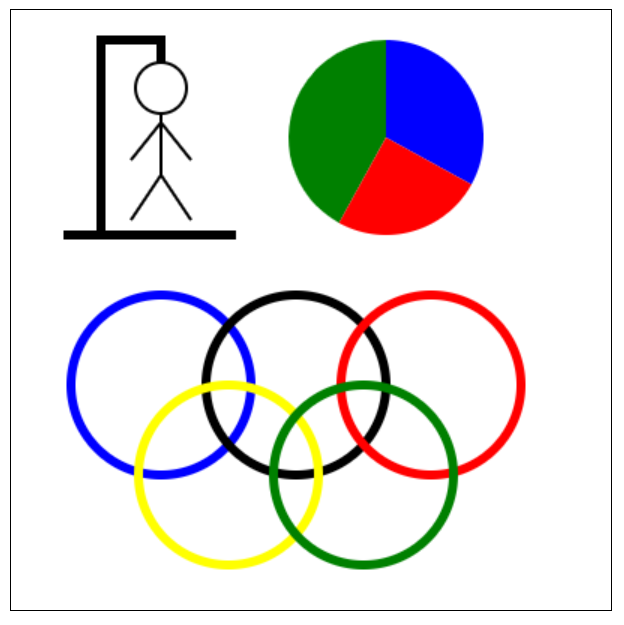

One of the most common uses of computer graphics is to display information in a pictorial or graphical form. This includes the generation of charts, graphs, and maps, as well as the visualization of scientific data. For example, medical imaging techniques such as MRI and CT scans use computer graphics to create detailed images of the human body.

Computer graphics is widely used in design and modeling applications, such as computer-aided design (CAD) for engineering and architectural design. It allows designers to create and manipulate 3D models of objects and structures, visualize designs from different angles, and simulate how they will look and function in the real world.

Computer graphics is also used to create realistic simulations and animations for various purposes, including entertainment, training, and scientific visualization. This includes the creation of 3D animations for movies and video games, as well as simulations for training pilots, surgeons, and other professionals.

Computer graphics plays a crucial role in the design of user interfaces for software applications. It allows developers to create visually appealing and intuitive interfaces that enhance the user experience. This includes the design of icons, buttons, menus, and other graphical elements that users interact with.

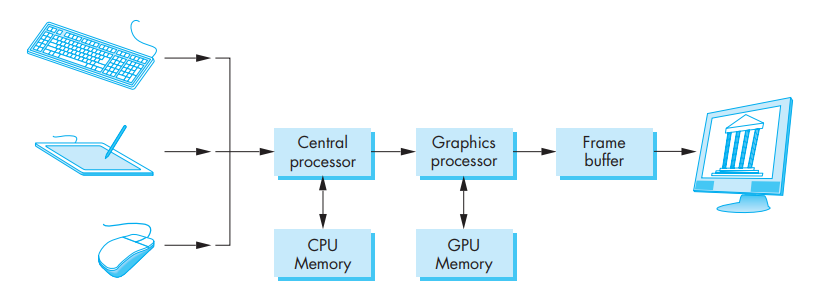

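A computer graphics system is a computer system; as such, it must have all the components of a general-purpose computer system. There are six major elements in our system: input devices, the central processing unit (CPU), the graphics processing unit (GPU), memory, the frame buffer, and output devices.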

These components are shown in the figure below:

This model is general enough to include workstations and personal computers, interactive game systems, mobile phones, GPS systems, and sophisticated image-generation systems. Although most of the components are present in a standard computer, it is the way each element is specialized for computer graphics that characterizes this diagram as a portrait of a graphics system.

Input devices are used to capture data (and images) from the real world and convert them into a form that can be processed by the computer.

Most graphics systems provide a keyboard and at least one other input device. The most common input devices are the mouse, the joystick, and the data tablet. Each provides positional information to the system, and each usually is equipped with one or more buttons to provide signals to the processor. Often called pointing devices, these devices allow a user to indicate a particular location on the display.

Modern systems, such as game consoles, provide a much richer set of input devices, with new devices appearing almost weekly. In addition, there are devices that provide three- (and more) dimensional input. Consequently, we want to provide a flexible model for incorporating the input from such devices into our graphics programs.

We can think about input devices in two distinct ways. The obvious one is to look at them as physical devices, such as a keyboard or a mouse, and to discuss how they work. Certainly, we need to know something about the physical properties of our input devices, so such a discussion is necessary if we are to obtain a full understanding of input. However, from the perspective of an application programmer, we should not need to know the details of a particular physical device to write an application program.

Rather, we prefer to treat input devices as logical devices whose properties are specified in terms of what they do from the perspective of the application program. A logical device is characterized by its high-level interface with the user program rather than by its physical characteristics.

Logical devices are familiar to all writers of high-level programs. For example, data input and output in Java are done through classes such as System.out for output, PrintWriter for writing to files, and Scanner for input, whose methods use the standard Java data types. When we output a string using System.out.println or PrintWriter.println, the physical device on which the output appears could be a printer, a terminal, or a disk file. This output could even be the input to another program. The details of the format required by the destination device are of minor concern to the writer of the application program.

In computer graphics, the use of logical devices is slightly more complex because the forms that input can take are more varied than the strings of bits or characters to which we are usually restricted in nongraphical applications. For example, we can use the mouse—a physical device—either to select a location on the screen of our CRT or to indicate which item in a menu we wish to select. In the first case, an x, y pair (in some coordinate system) is returned to the user program; in the second, the application program may receive an integer as the identifier of an entry in the menu. The separation of physical from logical devices allows us to use the same physical devices in multiple markedly different logical ways. It also allows the same program to work, without modification, if the mouse is replaced by another physical device, such as a data tablet or trackball.

From the physical perspective, each input device has properties that make it more suitable for certain tasks than for others. We take the view used in most of the workstation literature that there are two primary types of physical devices: pointing devices and keyboard devices.

The pointing device allows the user to indicate a position on the screen and almost always incorporates one or more buttons to allow the user to send signals or interrupts to the computer.

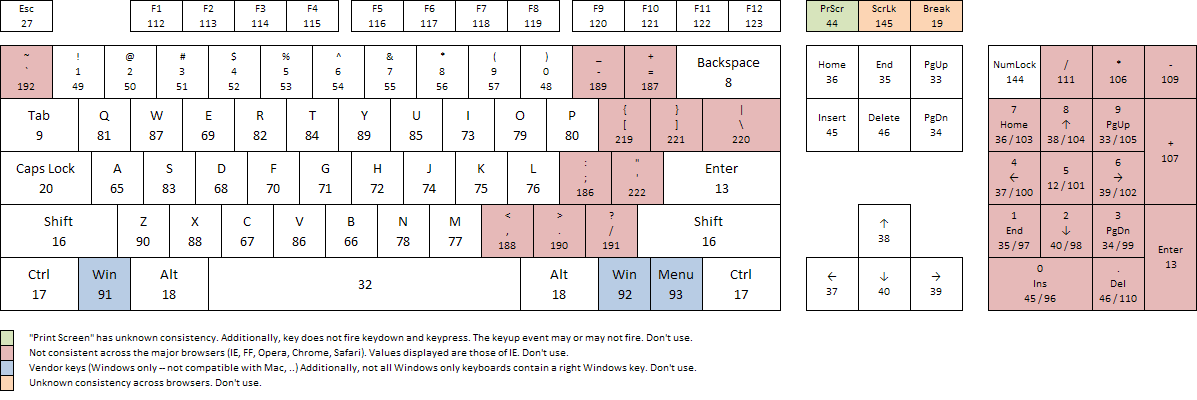

The keyboard device is almost always a physical keyboard but can be generalized to include any device that returns character codes. We use the American Standard Code for Information Interchange (ASCII) in our examples. ASCII assigns a single unsigned byte to each character. Nothing we do restricts us to this particular choice, other than that ASCII is the prevailing code used. Note, however, that other codes, especially those used for Internet applications, use multiple bytes for each character, thus allowing for a much richer set of supported characters.
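As a rough illustration of the difference between a one-byte code and a multi-byte code, the Java sketch below counts the bytes needed to encode a few characters. The choice of UTF-8 as the multi-byte code, and the names `CharacterCodes`, `utf8Length`, and `isAscii`, are assumptions made for this example; the text does not name a particular multi-byte code.

```java
import java.nio.charset.StandardCharsets;

final class CharacterCodes {
    /** Number of bytes needed to encode the text as UTF-8. */
    static int utf8Length(String text) {
        return text.getBytes(StandardCharsets.UTF_8).length;
    }

    /** True if every character has a code in the ASCII range 0..127. */
    static boolean isAscii(String text) {
        return text.chars().allMatch(c -> c < 128);
    }

    public static void main(String[] args) {
        System.out.println((int) 'A');              // ASCII code of 'A' is 65
        System.out.println(utf8Length("A"));        // an ASCII character needs one byte
        System.out.println(utf8Length("\u00e9"));   // e with acute accent needs more than one
        System.out.println(isAscii("caf\u00e9"));   // false: one character is outside ASCII
    }
}
```

A program that only ever handles ASCII text can treat characters and bytes interchangeably; once other codes are allowed, the two must be kept apart.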

The mouse and trackball are similar in use and often in construction as well. A typical mechanical mouse when turned over looks like a trackball. In both devices, the motion of the ball is converted to signals sent back to the computer by pairs of encoders inside the device that are turned by the motion of the ball. The encoders measure motion in two orthogonal directions.

There are many variants of these devices. Some use optical detectors rather than mechanical detectors to measure motion. Small trackballs are popular with portable computers because they can be incorporated directly into the keyboard. There are also various pressure-sensitive devices used in keyboards that perform similar functions to the mouse and trackball but that do not move; their encoders measure the pressure exerted on a small knob that often is located between two keys in the middle of the keyboard.

We can view the output of the mouse or trackball as two independent values provided by the device. These values can be considered as positions and converted, either within the graphics system or by the user program, to a two-dimensional location in a convenient coordinate system. If it is configured in this manner, we can use the device to position a marker (cursor) automatically on the display; however, we rarely use these devices in this direct manner.

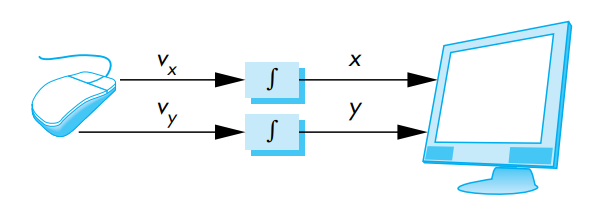

It is not necessary that the output of the mouse or trackball encoders be interpreted as a position. Instead, either the device driver or a user program can interpret the information from the encoder as two independent velocities. The computer can then integrate these values to obtain a two-dimensional position.

Thus, as a mouse moves across a surface, the integrals of the velocities yield x, y values that can be converted to indicate the position for a cursor on the screen, as shown below:

By interpreting the distance traveled by the ball as a velocity, we can use the device as a variable-sensitivity input device. Small deviations from rest cause slow or small changes; large deviations cause rapid large changes.

With either device, if the ball does not rotate, then there is no change in the integrals and a cursor tracking the position of the mouse will not move.

In this mode, these devices are relative-positioning devices because changes in the position of the ball yield a position in the user program; the absolute location of the ball (or the mouse) is not used by the application program.
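The integration of velocities into a position can be sketched in a few lines of Java. This is only an illustration under stated assumptions: the class name `RelativePointer`, the fixed time step passed to `update`, the starting position at the center of the screen, and the clamping to the screen edges are all choices made for the example, not details given in the text.

```java
final class RelativePointer {
    private final int width;
    private final int height;
    private double x;
    private double y;

    /** Starts the cursor at the center of a width-by-height screen (an assumption). */
    RelativePointer(int width, int height) {
        this.width = width;
        this.height = height;
        this.x = width / 2.0;
        this.y = height / 2.0;
    }

    /** Integrates one velocity sample (pixels per second) over dt seconds. */
    void update(double vx, double vy, double dt) {
        x = clamp(x + vx * dt, 0, width - 1);
        y = clamp(y + vy * dt, 0, height - 1);
    }

    private static double clamp(double value, double low, double high) {
        return Math.max(low, Math.min(high, value));
    }

    double x() { return x; }
    double y() { return y; }

    public static void main(String[] args) {
        RelativePointer cursor = new RelativePointer(640, 480);
        cursor.update(100, -50, 0.5);   // the ball is moving
        cursor.update(0, 0, 1.0);       // the ball is at rest: no change
        System.out.println(cursor.x() + ", " + cursor.y());
    }
}
```

Note that only changes are accumulated; nothing in `update` depends on where the mouse physically sits on the desk.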

Relative positioning, as provided by a mouse or trackball, is not always desirable.

In particular, these devices are not suitable for an operation such as tracing a diagram. If, while the user is attempting to follow a curve on the screen with a mouse, she lifts and moves the mouse, the absolute position on the curve being traced is lost.

Data tablets provide absolute positioning. A typical data tablet has rows and columns of wires embedded under its surface. The position of the stylus is determined through electromagnetic interactions between signals traveling through the wires and sensors in the stylus. Touch-sensitive transparent screens that can be placed over the face of a CRT have many of the same properties as the data tablet. Small, rectangular, pressure-sensitive touchpads are embedded in the keyboards of many portable computers. These touchpads can be configured as either relative- or absolute-positioning devices.

Two major characteristics describe the logical behavior of an input device: (1) the measurements that the device returns to the user program and (2) the time when the device returns those measurements.

The logical string device in Java is similar to using character input through Scanner or BufferedReader. A physical keyboard will return a string of characters to an application program; the same string might be provided from a file, or the user may see a virtual keyboard displayed on the output and use a pointing device to generate the string of characters. Logically, all three methods are examples of a string device, and application code for using such input can be the same regardless of which physical device is used.
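A minimal Java sketch of a logical string device follows. The class `StringDevice` and its method `readString` are hypothetical names introduced here; the point is only that the application code takes a `Reader` and does not know whether the characters come from a keyboard, a file, or a string in memory.

```java
import java.io.BufferedReader;
import java.io.IOException;
import java.io.Reader;
import java.io.StringReader;
import java.io.UncheckedIOException;

final class StringDevice {
    /** Returns the next line from any character source, or "" if the source is empty. */
    static String readString(Reader source) {
        try {
            String line = new BufferedReader(source).readLine();
            return line == null ? "" : line;
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    public static void main(String[] args) {
        // A string in memory stands in for a file or a virtual keyboard.
        System.out.println(readString(new StringReader("rotate 45\nscale 2")));

        // The physical keyboard would use the same call:
        // readString(new java.io.InputStreamReader(System.in));
    }
}
```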

The physical pointing device can be used in a variety of logical ways. As a locator it can provide a position to the application in either a device-independent coordinate system, such as world coordinates, as in OpenGL, or in screen coordinates, which the application can then transform to another coordinate system. A logical pick device returns the identifier of an object on the display to the application program. It is usually implemented with the same physical device as a locator but has a separate software interface to the user program.
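The two logical uses of a pointing device can be sketched as two small functions that take the same pixel position. Everything here is illustrative: the names `LogicalPointer`, `locate`, and `pick`, the convention that screen rows grow downward while world y grows upward, and the use of axis-aligned rectangles as pickable objects are assumptions for the example and are not taken from OpenGL or any other API.

```java
final class LogicalPointer {
    /**
     * Locator: maps pixel (px, py) to world coordinates in the window
     * [xMin, xMax] x [yMin, yMax]. Screen y is assumed to grow downward.
     */
    static double[] locate(int px, int py, int screenWidth, int screenHeight,
                           double xMin, double xMax, double yMin, double yMax) {
        double wx = xMin + (double) px / screenWidth * (xMax - xMin);
        double wy = yMax - (double) py / screenHeight * (yMax - yMin);
        return new double[] {wx, wy};
    }

    /**
     * Pick: returns the identifier of the topmost object under (px, py), or -1.
     * Each entry of bounds is {left, top, width, height}; the array index is the
     * identifier, and later entries are assumed to be drawn on top.
     */
    static int pick(int px, int py, int[][] bounds) {
        for (int id = bounds.length - 1; id >= 0; id--) {
            int[] b = bounds[id];
            boolean inside = px >= b[0] && px < b[0] + b[2]
                          && py >= b[1] && py < b[1] + b[3];
            if (inside) {
                return id;
            }
        }
        return -1;
    }

    public static void main(String[] args) {
        double[] world = locate(320, 240, 640, 480, -1, 1, -1, 1);
        System.out.println(world[0] + ", " + world[1]);

        int[][] objects = {{0, 0, 100, 100}, {50, 50, 100, 100}};
        System.out.println(pick(60, 60, objects));
    }
}
```

The same physical click produces a coordinate pair through one interface and an object identifier through the other.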

A widget is a graphical interactive device, provided by either the window system or a toolkit. Typical widgets include menus, scrollbars, and graphical buttons. Most widgets are implemented as special types of windows. Widgets can be used to provide additional types of logical devices. For example, a menu provides one of a number of choices, as may a row of graphical buttons. A logical valuator provides analog input to the user program, usually through a widget such as a slidebar, although the same logical input could be provided by a user typing numbers into a physical keyboard.

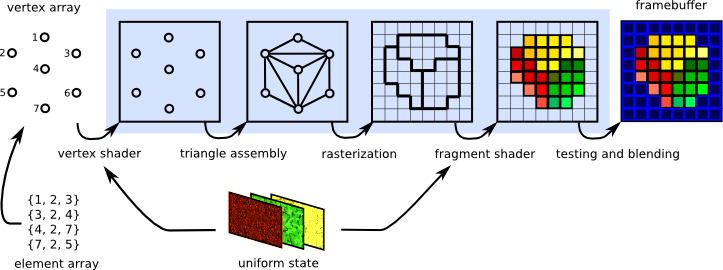

In a simple system, there may be only one processor, the central processing unit (CPU) of the system, which must do both the normal processing and the graphical processing. The main graphical function of the processor is to take specifications of graphical primitives (such as lines, circles, and polygons) generated by application programs and to assign values to the pixels in the frame buffer that best represent these entities.

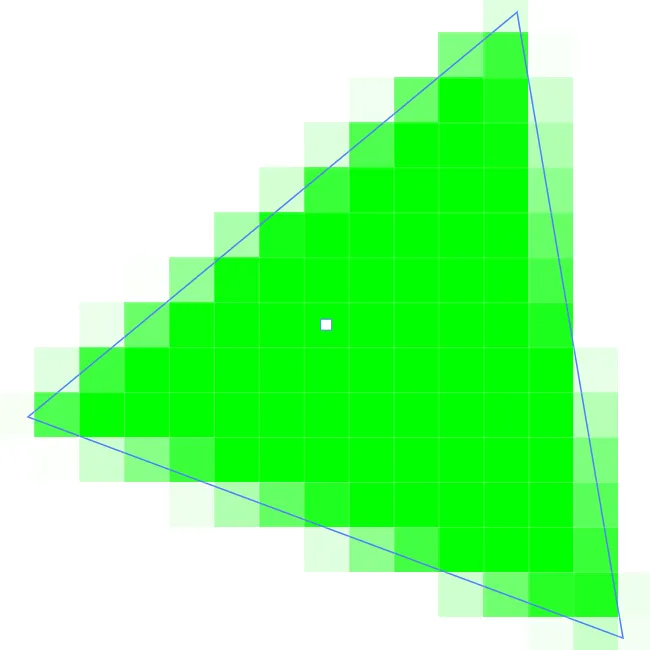

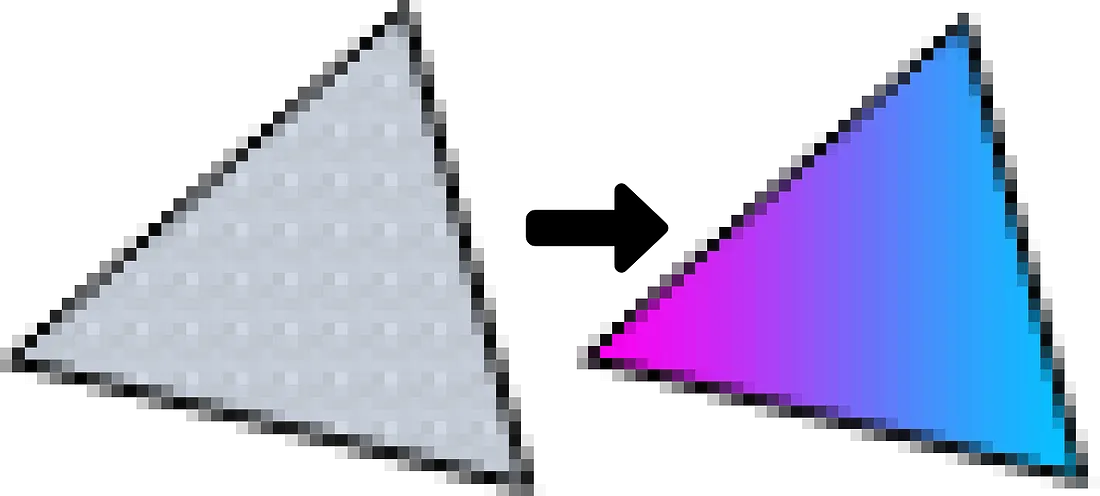

For example, a triangle is specified by its three vertices, but to display its outline by the three line segments connecting the vertices, the graphics system must generate a set of pixels that appear as line segments to the viewer. The conversion of geometric entities to pixel colors and locations in the frame buffer is known as rasterization, or scan conversion.
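To make the idea of rasterization concrete, the sketch below converts line segments to pixels using Bresenham's line algorithm, a classic integer-only method. The text does not say which algorithm a given graphics system uses, so this is one possible technique rather than a description of any particular system; the class name `LineRasterizer` and the character grid used for output are assumptions for the example.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

final class LineRasterizer {
    /** Returns the pixels approximating the segment (x0,y0)-(x1,y1), endpoints included. */
    static List<int[]> line(int x0, int y0, int x1, int y1) {
        List<int[]> pixels = new ArrayList<>();
        int dx = Math.abs(x1 - x0);
        int dy = -Math.abs(y1 - y0);
        int stepX = x0 < x1 ? 1 : -1;
        int stepY = y0 < y1 ? 1 : -1;
        int error = dx + dy;
        while (true) {
            pixels.add(new int[] {x0, y0});
            if (x0 == x1 && y0 == y1) {
                break;
            }
            int doubled = 2 * error;
            if (doubled >= dy) { error += dy; x0 += stepX; }
            if (doubled <= dx) { error += dx; y0 += stepY; }
        }
        return pixels;
    }

    public static void main(String[] args) {
        // Outline of a triangle specified by its three vertices.
        int[][] vertices = {{1, 1}, {10, 3}, {4, 7}};
        char[][] grid = new char[9][12];
        for (char[] row : grid) {
            Arrays.fill(row, '.');
        }
        for (int i = 0; i < 3; i++) {
            int[] a = vertices[i];
            int[] b = vertices[(i + 1) % 3];
            for (int[] p : line(a[0], a[1], b[0], b[1])) {
                grid[p[1]][p[0]] = '#';
            }
        }
        for (char[] row : grid) {
            System.out.println(new String(row));
        }
    }
}
```

Running `main` prints a small grid in which the three edges appear as staircase-like runs of `#` characters, which is what the viewer perceives as line segments at a sufficiently high resolution.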

In early graphics systems, the frame buffer was part of the standard memory that could be directly addressed by the CPU. Today, virtually all graphics systems are characterized by special-purpose graphics processing units (GPUs), custom-tailored to carry out specific graphics functions. The GPU can be either on the motherboard of the system or on a graphics card. The frame buffer is accessed through the graphics processing unit and usually is on the same circuit board as the GPU.

GPUs have evolved to the point where they are as complex as, or even more complex than, CPUs. They are characterized by both special-purpose modules geared toward graphical operations and a high degree of parallelism: recent GPUs contain over 100 processing units, each of which is user programmable. GPUs are so powerful that they can often be used as mini supercomputers for general-purpose computing.

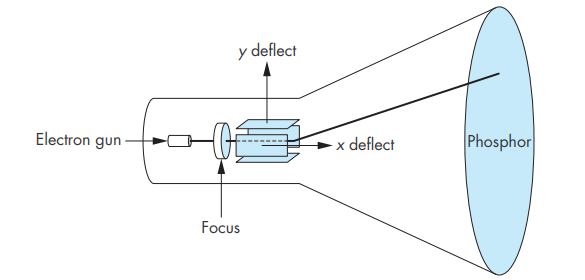

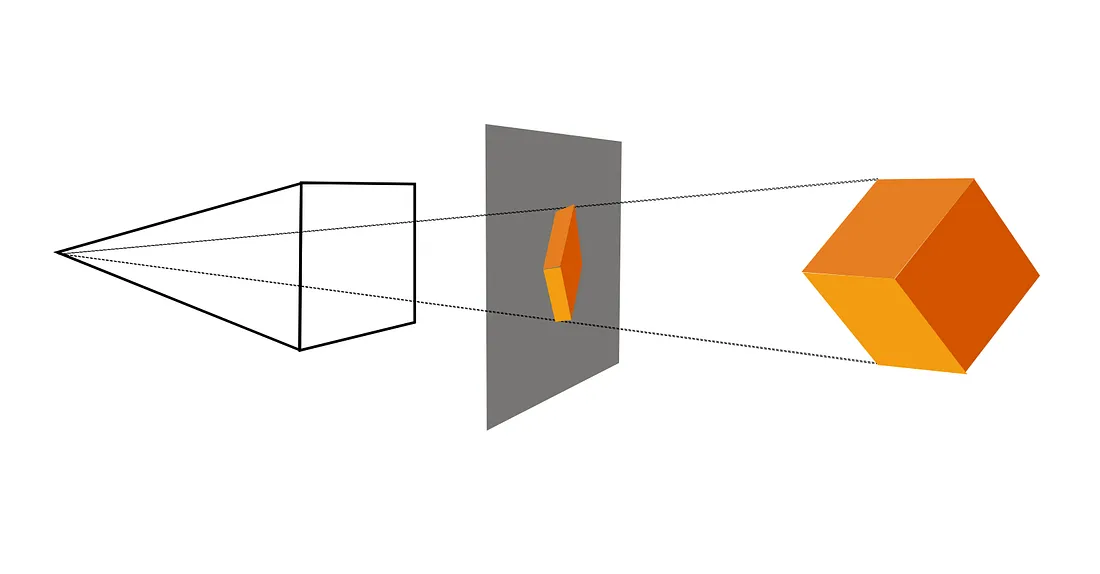

Until recently, the dominant type of display (or monitor) was the cathode-ray tube (CRT). A simplified picture of a CRT is shown below:

When electrons strike the phosphor coating on the tube, light is emitted. The direction of the beam is controlled by two pairs of deflection plates. The output of the computer is converted, by digital-to-analog converters, to voltages across the x and y deflection plates. Light appears on the surface of the CRT when a sufficiently intense beam of electrons is directed at the phosphor.

If the voltages steering the beam change at a constant rate, the beam will trace a straight line, visible to a viewer. Such a device is known as the random-scan, calligraphic, or vector CRT, because the beam can be moved directly from any position to any other position. If the intensity of the beam is turned off, the beam can be moved to a new position without changing any visible display. This configuration was the basis of early graphics systems that predated the present raster technology.

A typical CRT will emit light for only a short time (usually a few milliseconds) after the phosphor is excited by the electron beam. For a human to see a steady, flicker-free image on most CRT displays, the same path must be retraced, or refreshed, by the beam at a sufficiently high rate, the refresh rate. In older systems, the refresh rate is determined by the frequency of the power system, 60 cycles per second or 60 Hertz (Hz) in the United States and 50 Hz in much of the rest of the world. Modern displays are no longer coupled to these low frequencies and operate at rates up to about 85 Hz.

In a raster system, the graphics system takes pixels from the frame buffer and displays them as points on the surface of the display in one of two fundamental ways.

In a noninterlaced system, the pixels are displayed row by row, or scan line by scan line, at the refresh rate.

In an interlaced display, odd rows and even rows are refreshed alternately. Interlaced displays are used in commercial television. In an interlaced display operating at 60 Hz, the screen is redrawn in its entirety only 30 times per second, although the visual system is tricked into thinking the refresh rate is 60 Hz rather than 30 Hz. Viewers located near the screen, however, can tell the difference between the interlaced and noninterlaced displays. Noninterlaced displays are becoming more widespread, even though these displays process pixels at twice the rate of the interlaced display.
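The two scan orders, and the arithmetic behind the 60 Hz versus 30 Hz remark, can be written out as a short Java sketch. The class `ScanOrder` and its methods are hypothetical names; rows are numbered from 0 here, so "field 0" holds rows 0, 2, 4, ... and "field 1" holds rows 1, 3, 5, ..., which is an assumption about numbering rather than something the text fixes.

```java
import java.util.Arrays;

final class ScanOrder {
    /** Row order for one refresh of a noninterlaced display: every row, top to bottom. */
    static int[] progressive(int rows) {
        int[] order = new int[rows];
        for (int r = 0; r < rows; r++) {
            order[r] = r;
        }
        return order;
    }

    /** Rows drawn in one refresh of an interlaced display; fieldIndex is 0 or 1. */
    static int[] field(int rows, int fieldIndex) {
        int count = (rows - fieldIndex + 1) / 2;
        int[] order = new int[count];
        for (int i = 0; i < count; i++) {
            order[i] = fieldIndex + 2 * i;
        }
        return order;
    }

    /** Two fields are needed to redraw every row once. */
    static double fullRedrawsPerSecond(double fieldsPerSecond) {
        return fieldsPerSecond / 2.0;
    }

    public static void main(String[] args) {
        System.out.println(Arrays.toString(progressive(6)));
        System.out.println(Arrays.toString(field(6, 0)));
        System.out.println(Arrays.toString(field(6, 1)));
        System.out.println(fullRedrawsPerSecond(60));
    }
}
```

Each interlaced refresh touches only half of the rows, which is why a noninterlaced display at the same refresh rate must process pixels twice as fast.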

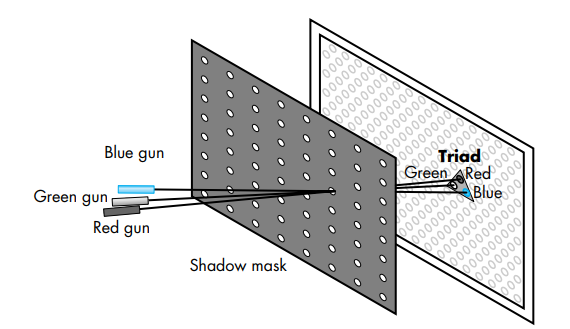

Color CRTs have three different colored phosphors (red, green, and blue), arranged in small groups. One common style arranges the phosphors in triangular groups called triads, each triad consisting of three phosphors, one of each primary. Most color CRTs have three electron beams, corresponding to the three types of phosphors.

In the shadow-mask CRT, a metal screen with small holes—the shadow mask—ensures that an electron beam excites only phosphors of the proper color:

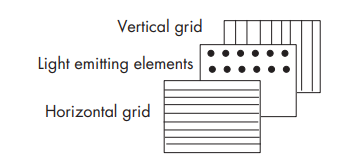

Although CRTs are still common display devices, they are rapidly being replaced by flat-screen technologies. Flat-panel monitors are inherently raster based. Although there are multiple technologies available, including light-emitting diodes (LEDs), liquid-crystal displays (LCDs), and plasma panels, all use a two-dimensional grid to address individual light-emitting elements.

The following shows a generic flat-panel monitor:

The two outside plates each contain parallel grids of wires that are oriented perpendicular to each other. By sending electrical signals to the proper wire in each grid, the electrical field at a location, determined by the intersection of two wires, can be made strong enough to control the corresponding element in the middle plate. The middle plate in an LED panel contains light-emitting diodes that can be turned on and off by the electrical signals sent to the grid. In an LCD display, the electrical field controls the polarization of the liquid crystals in the middle panel, thus turning on and off the light passing through the panel. A plasma panel uses the voltages on the grids to energize gases embedded between the glass panels holding the grids. The energized gas becomes a glowing plasma.

Most projection systems are also raster devices. These systems use a variety of technologies, including CRTs and digital light projection (DLP). From a user perspective, they act as standard monitors with similar resolutions and precisions. Hard-copy devices, such as printers and plotters, are also raster based but cannot be refreshed.

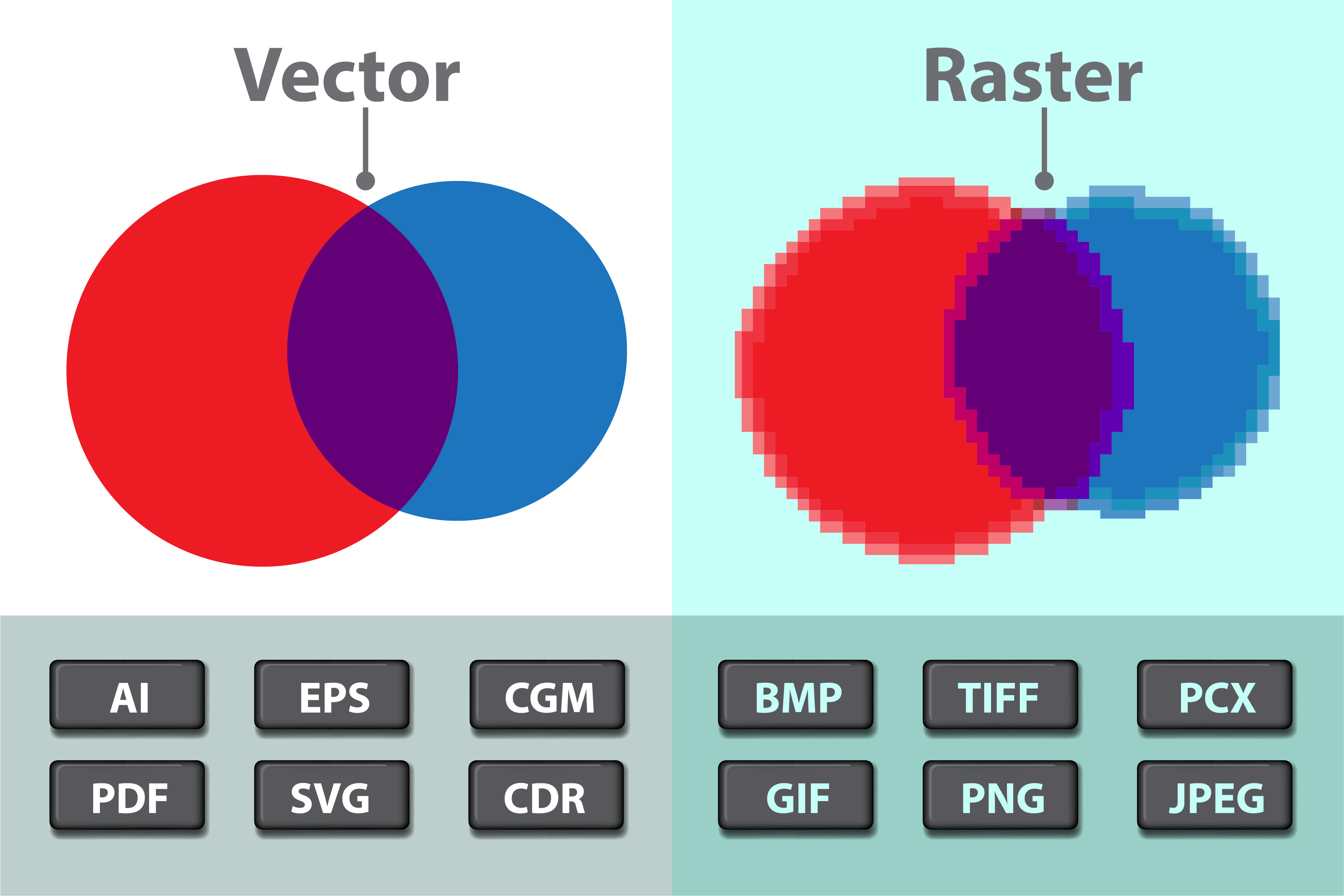

There are two kinds of 2D computer graphics: raster graphics and vector graphics.

Raster graphics, also known as bitmap graphics, are images that are made up of a grid of pixels.

The pixels are small enough that they are not easy to see individually. In fact, for many very high-resolution displays, they become essentially invisible. Each pixel in the grid has a specific color value, and together they form the complete image.

Modern screens typically use 24-bit color, where each color is defined by three 8-bit numbers representing the levels of red, green, and blue. These three primary colors combine to create any color displayed on the screen. Such systems are known as true-color, RGB-color, or full-color systems because each pixel's color is determined by the combination of red, green, and blue values.
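A 24-bit color is often stored in a single integer with eight bits per component. The sketch below assumes the common layout 0xRRGGBB (red in the highest of the three bytes); that layout and the class name `Rgb24` are assumptions for the example, since the text only says that three 8-bit numbers are used.

```java
final class Rgb24 {
    /** Packs three 8-bit components into one integer laid out as 0xRRGGBB. */
    static int pack(int red, int green, int blue) {
        return ((red & 0xFF) << 16) | ((green & 0xFF) << 8) | (blue & 0xFF);
    }

    static int red(int rgb)   { return (rgb >> 16) & 0xFF; }
    static int green(int rgb) { return (rgb >> 8) & 0xFF; }
    static int blue(int rgb)  { return rgb & 0xFF; }

    public static void main(String[] args) {
        int orange = pack(255, 128, 0);
        System.out.printf("%06X%n", orange);
        System.out.println(red(orange) + " " + green(orange) + " " + blue(orange));
        System.out.println(1 << 24);   // number of distinct 24-bit colors
    }
}
```

With 8 bits per component there are 256 levels each of red, green, and blue, and therefore 256 × 256 × 256 = 16,777,216 distinct colors.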

Other formats are possible, such as grayscale, where each pixel is some shade of gray and the pixel color is given by one number that specifies the level of gray on a black-to-white scale. Typically, 256 shades of gray are used.

Early computer screens used indexed color, where only a small set of colors, usually 16 or 256, could be displayed. For an indexed color display, there is a numbered list of possible colors, and the color of a pixel is specified by an integer giving the position of the color in the list.
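Indexed color amounts to a table lookup, which the following sketch spells out. The names `IndexedColor` and `toRgb`, the 0xRRGGBB packing of palette entries, and the use of one byte per pixel index are assumptions for illustration.

```java
final class IndexedColor {
    /**
     * Expands per-pixel palette indices into packed 0xRRGGBB colors.
     * Each index is one byte, read as an unsigned value 0..255.
     */
    static int[] toRgb(int[] palette, byte[] indices) {
        int[] colors = new int[indices.length];
        for (int i = 0; i < indices.length; i++) {
            colors[i] = palette[indices[i] & 0xFF];
        }
        return colors;
    }

    public static void main(String[] args) {
        int[] palette = {0x000000, 0xFFFFFF, 0xFF0000};   // black, white, red
        byte[] pixels = {2, 0, 1, 2};
        for (int color : toRgb(palette, pixels)) {
            System.out.printf("%06X%n", color);
        }
    }
}
```

Each pixel costs only as many bits as are needed to number the palette entries, which is why indexed color suited systems with little memory.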

In any case, the color values for all the pixels on the screen are stored in a large block of memory known as a frame buffer. Changing the image on the screen requires changing color values that are stored in the frame buffer. The screen is redrawn many times per second, so that almost immediately after the color values are changed in the frame buffer, the colors of the pixels on the screen will be changed to match, and the displayed image will change.

In a very simple system, the frame buffer holds only the colored pixels that are displayed on the screen. In most systems, the frame buffer holds far more information, such as depth information needed for creating images from three-dimensional data. In these systems, the frame buffer comprises multiple buffers, one or more of which are color buffers that hold the colored pixels that are displayed. For now, we can use the terms frame buffer and color buffer synonymously without confusion.
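The simplest kind of frame buffer, one that holds only a color buffer, can be modeled as an array with one entry per pixel. This is a sketch under assumptions: the class name `FrameBuffer`, the row-by-row storage order, and the packed 0xRRGGBB color values are illustrative choices, and a real frame buffer lives in graphics hardware rather than in a Java array.

```java
import java.util.Arrays;

final class FrameBuffer {
    private final int width;
    private final int height;
    private final int[] colorBuffer;   // one packed 0xRRGGBB value per pixel, row by row

    FrameBuffer(int width, int height) {
        this.width = width;
        this.height = height;
        this.colorBuffer = new int[width * height];
    }

    /** Sets every pixel to the same color. */
    void clear(int rgb) {
        Arrays.fill(colorBuffer, rgb);
    }

    void setPixel(int x, int y, int rgb) {
        colorBuffer[index(x, y)] = rgb;
    }

    int getPixel(int x, int y) {
        return colorBuffer[index(x, y)];
    }

    private int index(int x, int y) {
        if (x < 0 || x >= width || y < 0 || y >= height) {
            throw new IndexOutOfBoundsException("pixel (" + x + ", " + y + ") is off screen");
        }
        return y * width + x;
    }

    public static void main(String[] args) {
        FrameBuffer screen = new FrameBuffer(4, 3);
        screen.clear(0x000000);
        screen.setPixel(1, 2, 0xFF0000);   // the next refresh would show one red pixel
        System.out.printf("%06X%n", screen.getPixel(1, 2));
    }
}
```

Changing the displayed image means nothing more than writing new values into this array; the display hardware picks them up on the next refresh.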

A computer screen used in this way is the basic model of raster graphics. The term "raster" technically refers to the mechanism used on older vacuum tube computer monitors: An electron beam would move along the rows of pixels, making them glow. The beam was moved across the screen by powerful magnets that would deflect the path of the electrons. The stronger the beam, the brighter the glow of the pixel, so the brightness of the pixels could be controlled by modulating the intensity of the electron beam. The color values stored in the frame buffer were used to determine the intensity of the electron beam. (For a color screen, each pixel had a red dot, a green dot, and a blue dot, which were separately illuminated by the beam.)

Virtually all modern graphics systems are raster based. The image we see on the output device is an array—the raster—of picture elements, or pixels, produced by the graphics system.

Raster graphics are best suited for representing complex images with many colors and gradients, such as photographs and detailed illustrations.

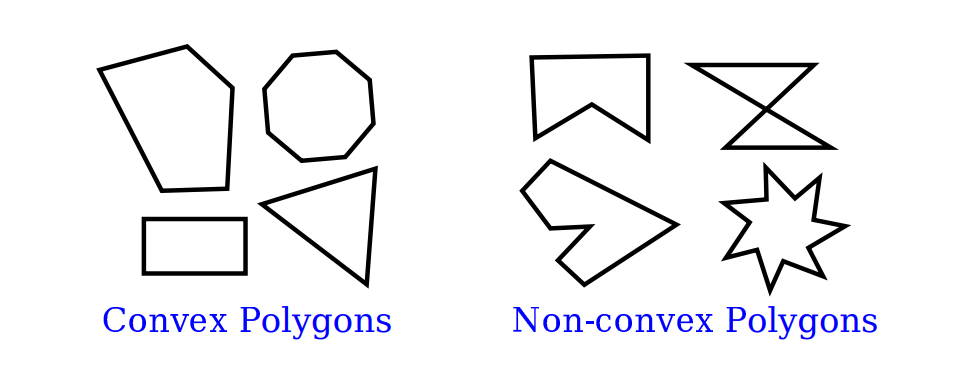

Although images on the computer screen are represented using pixels, specifying individual pixel colors is not always the best way to create an image. Another way is to specify the basic geometric objects that it contains, shapes such as lines, circles, triangles, and rectangles. This is the idea that defines vector graphics: Represent an image as a list of the geometric shapes that it contains.

To make things more interesting, the shapes can have attributes, such as the thickness of a line or the color that fills a rectangle. Of course, not every image can be composed from simple geometric shapes. This approach certainly wouldn't work for a picture of a beautiful sunset (or for most any other photographic image). However, it works well for many types of images, such as architectural blueprints and scientific illustrations.

In fact, early in the history of computing, vector graphics was even used directly on computer screens. When the first graphical computer displays were developed, raster displays were too slow and expensive to be practical. Fortunately, it was possible to use vacuum tube technology in another way: The electron beam could be made to directly draw a line on the screen, simply by sweeping the beam along that line. A vector graphics display would store a display list of lines that should appear on the screen. Since a point on the screen would glow only very briefly after being illuminated by the electron beam, the graphics display would go through the display list over and over, continually redrawing all the lines on the list. To change the image, it would only be necessary to change the contents of the display list. Of course, if the display list became too long, the image would start to flicker because a line would have a chance to visibly fade before its next turn to be redrawn.

But here is the point: For an image that can be specified as a reasonably small number of geometric shapes, the amount of information needed to represent the image is much smaller using a vector representation than using a raster representation. Consider an image made up of one thousand line segments. For a vector representation of the image, we only need to store the coordinates of two thousand points, the endpoints of the lines. This would take up only a few kilobytes of memory. To store the image in a frame buffer for a raster display would require much more memory. Similarly, a vector display could draw the lines on the screen more quickly than a raster display could copy the same image from the frame buffer to the screen. (As soon as raster displays became fast and inexpensive, however, they quickly displaced vector displays because of their ability to display all types of images reasonably well.)
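The memory comparison can be checked with a little arithmetic. The figures plugged in below are assumptions chosen for illustration, not values from the text: 4 bytes per stored coordinate, and a 1024 × 768 raster at 24 bits per pixel. The class and method names are likewise hypothetical.

```java
final class StorageEstimate {
    /** Bytes for a list of 2D line segments: two endpoints, two coordinates each. */
    static long vectorBytes(int segments, int bytesPerCoordinate) {
        int endpointsPerSegment = 2;
        int coordinatesPerPoint = 2;
        return (long) segments * endpointsPerSegment * coordinatesPerPoint * bytesPerCoordinate;
    }

    /** Bytes for a color buffer of the given size and color depth. */
    static long rasterBytes(int width, int height, int bitsPerPixel) {
        return (long) width * height * bitsPerPixel / 8;
    }

    public static void main(String[] args) {
        long vector = vectorBytes(1000, 4);
        long raster = rasterBytes(1024, 768, 24);
        System.out.println("vector: " + vector + " bytes");
        System.out.println("raster: " + raster + " bytes");
        System.out.println("ratio:  " + raster / vector);
    }
}
```

Under these assumptions the line list needs 16,000 bytes while the raster needs 2,359,296 bytes, a difference of more than two orders of magnitude.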

Unlike raster graphics, vector graphics are resolution-independent, meaning that they can be scaled to any size without losing quality. This is because, instead of pixels, vector graphics use points, lines, and curves to represent elements.

Vector graphics are best suited for representing simple images with solid colors and sharp edges, such as logos and icons, and are widely used in the graphic design, architectural design, and illustration industries.

In summary, raster graphics are made up of pixels and are best suited for complex images with many colors, while vector graphics are made up of lines and curves and are best suited for simple images with solid colors.

The divide between raster graphics and vector graphics persists in several areas of computer graphics.

For example, it can be seen in a division between two categories of programs that can be used to create images: painting programs and drawing programs.

In a painting program, the image is represented as a grid of pixels, and the user creates an image by assigning colors to pixels. This might be done by using a "drawing tool" that acts like a painter's brush, or even by tools that draw geometric shapes such as lines or rectangles. But the point in a painting program is to color the individual pixels, and it is only the pixel colors that are saved. To make this clearer, suppose that we use a painting program to draw a house, then draw a tree in front of the house. If we then erase the tree, we'll only reveal a blank background, not a house. In fact, the image never really contained a "house" at all, only individually colored pixels that the viewer might perceive as making up a picture of a house.

In a drawing program, the user creates an image by adding geometric shapes, and the image is represented as a list of those shapes. If we place a house shape (or collection of shapes making up a house) in the image, and we then place a tree shape on top of the house, the house is still there, since it is stored in the list of shapes that the image contains. If we delete the tree, the house will still be in the image, just as it was before we added the tree. Furthermore, we should be able to select one of the shapes in the image and move it or change its size, so drawing programs offer a rich set of editing operations that are not possible in painting programs. (The reverse, however, is also true.)
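The house-and-tree example can be acted out in code. In this sketch the "shapes" are just filled rectangles on a tiny character grid, with `H` for house pixels, `T` for tree pixels, and `.` for the background; the class `PaintVersusDraw` and all of its members are hypothetical and exist only to contrast the two data models.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

final class PaintVersusDraw {
    static final char BACKGROUND = '.';

    /** A filled rectangle standing in for any geometric shape. */
    static final class Shape {
        final char fill;
        final int x, y, width, height;

        Shape(char fill, int x, int y, int width, int height) {
            this.fill = fill;
            this.x = x;
            this.y = y;
            this.width = width;
            this.height = height;
        }
    }

    static char[][] blank(int width, int height) {
        char[][] pixels = new char[height][width];
        for (char[] row : pixels) {
            Arrays.fill(row, BACKGROUND);
        }
        return pixels;
    }

    /** Painting model: overwrite pixels; nothing else about the shape is kept. */
    static void paint(char[][] pixels, Shape s) {
        for (int row = s.y; row < s.y + s.height; row++) {
            for (int col = s.x; col < s.x + s.width; col++) {
                pixels[row][col] = s.fill;
            }
        }
    }

    /** Drawing model: the image is the shape list; pixels are regenerated from it. */
    static char[][] render(List<Shape> shapes, int width, int height) {
        char[][] pixels = blank(width, height);
        for (Shape s : shapes) {
            paint(pixels, s);
        }
        return pixels;
    }

    public static void main(String[] args) {
        Shape house = new Shape('H', 0, 0, 4, 3);
        Shape tree = new Shape('T', 2, 1, 3, 2);

        // Painting program: erasing the tree leaves background, not house.
        char[][] canvas = blank(6, 3);
        paint(canvas, house);
        paint(canvas, tree);
        paint(canvas, new Shape(BACKGROUND, 2, 1, 3, 2));
        System.out.println("painting: " + canvas[1][2]);

        // Drawing program: deleting the tree from the list restores the house.
        List<Shape> shapes = new ArrayList<>(List.of(house, tree));
        shapes.remove(tree);
        System.out.println("drawing:  " + render(shapes, 6, 3)[1][2]);
    }
}
```

The pixel at row 1, column 2 lies under both the house and the tree, so it shows the difference: background in the painting model, house in the drawing model.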

A practical program for image creation and editing might combine elements of painting and drawing, although one or the other is usually dominant.

For example, a drawing program might allow the user to include a raster-type image, treating it as one shape. A painting program might let the user create “layers,” which are separate images that can be layered one on top of another to create the final image. The layers can then be manipulated much like the shapes in a drawing program (so that we could keep both our house and our tree in separate layers, even if, in the image, the house is behind the tree).

Two well-known graphics programs are Adobe Photoshop and Adobe Illustrator. Photoshop is in the category of painting programs, while Illustrator is more of a drawing program. In the world of free software, the GNU Image Manipulation Program, GIMP, is a good alternative to Photoshop, while Inkscape is a reasonably capable free drawing program.

The divide between raster and vector graphics also appears in the field of graphics file formats. There are many ways to represent an image as data stored in a file. If the original image is to be recovered from the bits stored in the file, the representation must follow some exact, known specification.

Such a specification is called a graphics file format.

Some popular graphics file formats include GIF, PNG, JPEG, WebP, and SVG. Most images used on the Web are GIF, PNG, or JPEG, but most browsers also have support for SVG images and for the newer WebP format.

GIF, PNG, JPEG, and WebP are raster graphics formats; an image is specified by storing a color value for each pixel.

JPEG (Joint Photographic Experts Group) allows up to 16 million colors and is best for images with many colors or color gradations, especially photographs. JPEG is a "lossy" format, meaning each time the image is saved and compressed, some image information is lost, degrading quality. JPEG images allow for various levels of compression.

Low compression means high image quality, but large file size. High compression means lower image quality, but smaller file size.

GIF (Graphics Interchange Format) is a "lossless" format, meaning image quality is not degraded through compression. However, GIFs are limited to a 256-color palette, making them suitable for simpler graphics with fewer colors. GIFs also support transparent backgrounds and simple animations.

PNG (Portable Network Graphics) combines features of both JPEG and GIF. PNG supports millions of colors and transparent backgrounds. It uses lossless compression, ensuring no quality loss. However, PNGs may not be supported by older web browsers.

WebP is a modern format that supports both lossless and lossy compression, providing a balance between image quality and file size.

The amount of data necessary to represent a raster image can be quite large. However, the data usually contains a lot of redundancy and can be compressed to reduce its size. GIF and PNG use lossless compression, meaning the original image can be perfectly recovered. JPEG uses lossy compression, which allows for greater reduction in file size but at the cost of some image quality. WebP supports both types of compression.
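To see how redundancy makes lossless compression possible, consider run-length encoding, which replaces a run of identical pixels with a count and a value. This is deliberately the simplest scheme available and is not what GIF or PNG actually use (GIF is based on LZW and PNG on DEFLATE); the class `RunLength` below is only a sketch of the general idea that the original data can be recovered exactly.

```java
import java.util.Arrays;

final class RunLength {
    /** Encodes pixels as consecutive (count, value) pairs. */
    static int[] encode(int[] pixels) {
        int[] out = new int[pixels.length * 2];   // worst case: no two neighbors are equal
        int used = 0;
        int i = 0;
        while (i < pixels.length) {
            int j = i;
            while (j < pixels.length && pixels[j] == pixels[i]) {
                j++;
            }
            out[used++] = j - i;       // length of the run
            out[used++] = pixels[i];   // value repeated in the run
            i = j;
        }
        return Arrays.copyOf(out, used);
    }

    /** Rebuilds the original pixels from (count, value) pairs. */
    static int[] decode(int[] runs) {
        int total = 0;
        for (int i = 0; i < runs.length; i += 2) {
            total += runs[i];
        }
        int[] pixels = new int[total];
        int p = 0;
        for (int i = 0; i < runs.length; i += 2) {
            for (int k = 0; k < runs[i]; k++) {
                pixels[p++] = runs[i + 1];
            }
        }
        return pixels;
    }

    public static void main(String[] args) {
        int[] row = {7, 7, 7, 7, 0, 0, 7};
        int[] packed = encode(row);
        System.out.println(Arrays.toString(packed));
        System.out.println(Arrays.equals(row, decode(packed)));   // lossless round trip
    }
}
```

An image with large uniform areas shrinks considerably under such a scheme, while an image in which neighboring pixels rarely match can even grow, which hints at why photographs are usually stored with lossy compression instead.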

SVG, on the other hand, is fundamentally a vector graphics format (although SVG images can include raster images). SVG is actually an XML-based language for describing two-dimensional vector graphics images.

"SVG" stands for "Scalable Vector Graphics," and the term "scalable" indicates one of the advantages of vector graphics: There is no loss of quality when the size of the image is increased. A line between two points can be represented at any scale, and it is still the same perfect geometric line. If we try to greatly increase the size of a raster image, on the other hand, we will find that we don't have enough color values for all the pixels in the new image; each pixel from the original image will be expanded to cover a rectangle of pixels in the scaled image, and we will get multi-pixel blocks of uniform color. The scalable nature of SVG images makes them a good choice for web browsers and for graphical elements on our computer's desktop. And indeed, some desktop environments are now using SVG images for their desktop icons.

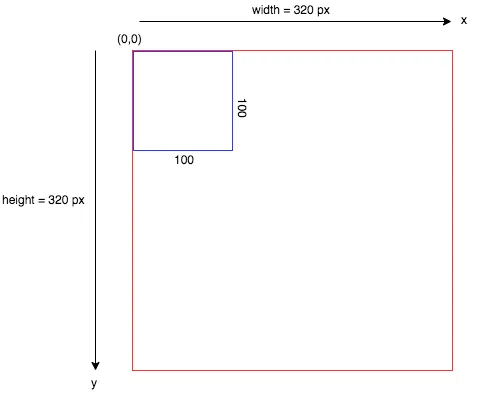

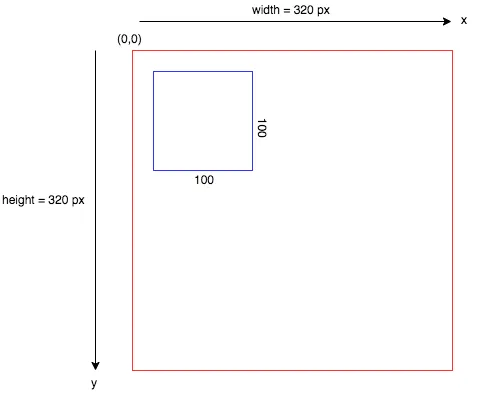
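The blocky result of enlarging a raster image, described above, can be reproduced with a small sketch. The function name `upscale_nearest` and the 2x2 test image are illustrative assumptions; real image libraries offer smarter resampling filters.

```python
def upscale_nearest(pixels, factor):
    """Enlarge a raster image by an integer factor by copying pixels.

    `pixels` is a list of rows; each row is a list of color values.
    """
    scaled = []
    for row in pixels:
        # Repeat every pixel `factor` times horizontally...
        wide_row = [color for color in row for _ in range(factor)]
        # ...and repeat the widened row `factor` times vertically.
        for _ in range(factor):
            scaled.append(list(wide_row))
    return scaled


small = [["black", "white"],
         ["white", "black"]]
big = upscale_nearest(small, 3)
for row in big:
    print(" ".join(color[0] for color in row))
```

Each original pixel becomes a 3x3 block of uniform color, which is exactly the effect a vector image avoids.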

A digital image, no matter what its format, is specified using a coordinate system. A coordinate system sets up a correspondence between numbers and geometric points. In two dimensions, each point is assigned a pair of numbers, which are called the coordinates of the point. The two coordinates of a point are often called its x-coordinate and y-coordinate, although the names "x" and "y" are arbitrary.

A raster image is a two-dimensional grid of pixels arranged into rows and columns. As such, it has a natural coordinate system in which each pixel corresponds to a pair of integers giving the number of the row and the number of the column that contain the pixel. (Even in this simple case, there is some disagreement as to whether the rows should be numbered from top-to-bottom or from bottom-to-top.)

For a vector image, it is natural to use real-number coordinates. The coordinate system for an image is arbitrary to some degree; that is, the same image can be specified using different coordinate systems.

As previously mentioned, most images viewed online are raster-based. Raster images are created with pixel-based software or captured with a camera or scanner. Raster formats such as JPEG, GIF, and PNG are the more common kind in general and are widely used on the web.

To create these two-dimensional images, each point in the image is assigned a color.

A point in 2D can be identified by a pair of numerical coordinates. Colors can also be specified numerically.

However, the assignment of numbers to points or colors is somewhat arbitrary. So we need to spend some time studying coordinate systems, which associate numbers to points, and color models, which associate numbers to colors.

A digital image is made up of rows and columns of pixels. A pixel in such an image can be specified by saying which column and which row contains it. In terms of coordinates, a pixel can be identified by a pair of integers giving the column number and the row number.

For example, the pixel with coordinates (3,5) would lie in column number 3 and row number 5.

Conventionally, columns are numbered from left to right, starting with zero. Most graphics systems, such as HTML canvas, number rows from top to bottom, starting from zero.

Some, including OpenGL, number the rows from bottom to top instead.

Note in particular that the pixel that is identified by a pair of coordinates (x,y) depends on the choice of coordinate system. We always need to know what coordinate system is in use before we know what point we are talking about.
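Converting a row number between the two conventions is a one-line calculation. The sketch below assumes an image that is `height` pixels tall with rows numbered from zero; the function name `flip_row` is made up for illustration.

```python
def flip_row(row, height):
    """Convert a row index between top-down and bottom-up numbering.

    The same formula works in both directions.
    """
    return height - 1 - row


height = 600
print(flip_row(0, height))    # the top row in one system is row 599 in the other
print(flip_row(599, height))  # and the last row maps back to row 0
```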

Row and column numbers identify a pixel, not a point. A pixel contains many points; mathematically, it contains an infinite number of points. The goal of computer graphics is not really to color pixels—it is to create and manipulate images. In some ideal sense, an image should be defined by specifying a color for each point, not just for each pixel. Pixels are an approximation. If we imagine that there is a true, ideal image that we want to display, then any image that we display by coloring pixels is an approximation. This has many implications.

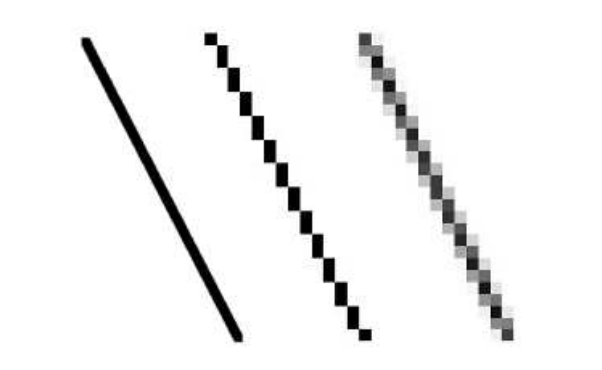

Suppose, for example, that we want to draw a line segment. A mathematical line has no thickness and would be invisible. So we really want to draw a thick line segment, with some specified width.

Let's say that the line should be one pixel wide.

The problem is that, unless the line is horizontal or vertical, we can't actually draw the line by coloring pixels. A diagonal geometric line will cover some pixels only partially. It is not possible to make part of a pixel black and part of it white. When we try to draw a line with black and white pixels only, the result is a jagged staircase effect.

This effect is an example of something called "aliasing".

Aliasing can also be seen in the outlines of characters drawn on the screen and in diagonal or curved boundaries between any two regions of different color. (The term aliasing likely comes from the fact that ideal images are naturally described in real-number coordinates. When we try to represent the image using pixels, many real-number coordinates will map to the same integer pixel coordinates; they can all be considered as different names or "aliases" for the same pixel.)

Anti-aliasing is a fundamental technique employed in graphics production that allows for smoother and more realistic images. This technology is used to reduce the jagged edges or "jaggies" that are commonly seen in computer-generated images, allowing them to appear as they would in real life.

It was presented in 1972 at the Massachusetts Institute of Technology by the Architecture Machine Group, which later became known as the Media Lab, a laboratory engaged in research and development in technology, science, art, design, and medicine.

The idea is that when a pixel is only partially covered by a shape, the color of the pixel should be a mixture of the color of the shape and the color of the background. When drawing a black line on a white background, the color of a partially covered pixel would be gray, with the shade of gray depending on the fraction of the pixel that is covered by the line. (In practice, calculating this area exactly for each pixel would be too difficult, so some approximate method is used.)
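The mixing rule can be written as a weighted average. This is a minimal sketch, assuming the covered fraction of the pixel is already known; the function name `blend` is illustrative.

```python
def blend(shape_color, background_color, coverage):
    """Mix two RGB colors; `coverage` is the covered fraction of the pixel (0..1)."""
    return tuple(
        round(coverage * s + (1.0 - coverage) * b)
        for s, b in zip(shape_color, background_color)
    )


BLACK = (0, 0, 0)
WHITE = (255, 255, 255)

# A pixel one-quarter covered by a black line on a white background.
print(blend(BLACK, WHITE, 0.25))  # (191, 191, 191), a light gray
```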

At its core, anti-aliasing (also known as AA) is a method of manipulating pixels so that they appear smoother than they actually are. To achieve this effect, the software or hardware being used will sample adjacent pixels and create an average color value between them. This helps the image appear more natural and realistic since it blends together sharp pixel lines into one continuous line instead of several distinct pixelated lines.

So why does the "jagged" effect occur? Modern monitors and mobile screens are made up of rectangular elements: pixels. This means that only horizontal or vertical lines can be displayed as straight lines with clean boundaries. Angled lines and curves are displayed as "steps". For example, the line in the picture below appears straight, but as we zoom in, it becomes clear that it is not.

Here, for example, is a geometric line, shown on the left, along with two approximations of that line made by coloring pixels. The lines are greatly magnified so that we can see the individual pixels. The line on the right is drawn using anti-aliasing, while the one in the middle is not:

Note that anti-aliasing does not give a perfect image, but it can reduce the "jaggies" that are caused by aliasing (at least when it is viewed on a normal scale).

Anyone who has played older games is familiar with the distinctive pixelated and blocky aesthetic. "Jaggedness" occurs due to the lack of smooth transitions between colors, and anti-aliasing helps to mitigate this issue.

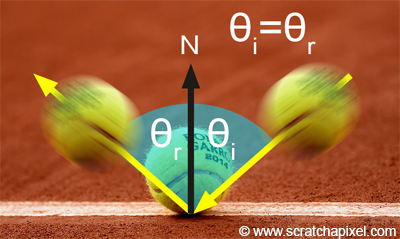

Jagged edges, or aliasing, occur when real-world objects with smooth, continuous curves are rasterized using pixels. This problem arises from undersampling, which happens when the sampling frequency is lower than the Nyquist rate (that is, less than twice the highest frequency present in the image), leading to a loss of information about the image.

Anti-aliasing works by sampling multiple points within and around each pixel, then calculating an average color value. This process effectively blurs the edges of objects, creating the illusion of smoother lines and reducing visible pixelation.

While anti-aliasing improves image quality, it also increases the load on the processor and graphics card, as they need to render additional shades and expend more power resources.

One way to reduce jagged edges is to increase the resolution, as higher resolution images have smaller pixels, making the blocky appearance less noticeable. However, resolution alone is not always sufficient, and software developers use various anti-aliasing techniques to further improve image quality.

There are essentially four methods of Anti-Aliasing:

Using a high-resolution display is one of the simplest methods of anti-aliasing. By increasing the resolution, more pixels can be used to represent the image, reducing the appearance of jagged edges. However, this method is limited by the physical resolution of the display and may not be practical for all applications.

Post-filtering, also known as supersampling, involves treating the screen as if it has a finer grid, effectively reducing the pixel size. The average intensity of each pixel is calculated from the intensities of subpixels, and the image is displayed at the screen resolution. This method is called post-filtering because it is done after generating the rasterized image.

Pre-filtering, or area sampling, calculates pixel intensities based on the areas of overlap between each pixel and the objects to be displayed. The final pixel color is an average of the colors of the overlapping areas. This method is called pre-filtering because it is done before generating the rasterized image.

Pixel phasing involves shifting pixel positions to approximate the positions near object geometry. Some systems allow the size of individual pixels to be adjusted to distribute intensities, which helps in pixel phasing.

Generally, anti-aliasing methods can be grouped into three broad categories: spatial, post-processing, and temporal.

Spatial anti-aliasing techniques work by sampling multiple points within each pixel and averaging the colors to reduce jagged edges.

Supersampling Anti-Aliasing (SSAA), also called full-scene anti-aliasing (FSAA), works by rendering the image at a higher resolution and then downsampling it to the display resolution. This method reduces jagged edges by averaging colors near the edges.

In this approach, a 512x512 image is first computed at higher resolution, such as 2048x2048, for example. It is then reduced through averaging or filtering to produce a 512x512 image.

While effective, SSAA is computationally intensive and can heavily load the GPU.
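The render-then-average idea can be sketched on a tiny scale. Here a 16x16 grid stands in for the high-resolution render and a 4x4 grid for the final image; the hard-edged diagonal scene and both function names are assumptions made for illustration, and a plain box average is used as the filter.

```python
def render_edge(size):
    """Hypothetical scene: 1.0 (shape) where a sample lies below the diagonal, else 0.0."""
    return [[1.0 if y > x else 0.0 for x in range(size)] for y in range(size)]


def downsample(image, factor):
    """Average each factor x factor block of samples into one output pixel."""
    out_h = len(image) // factor
    out_w = len(image[0]) // factor
    result = []
    for oy in range(out_h):
        row = []
        for ox in range(out_w):
            total = 0.0
            for sy in range(factor):
                for sx in range(factor):
                    total += image[oy * factor + sy][ox * factor + sx]
            row.append(total / (factor * factor))
        result.append(row)
    return result


high = render_edge(16)     # stand-in for the high-resolution render
low = downsample(high, 4)  # stand-in for the display-resolution image
for row in low:
    print(" ".join(f"{value:.3f}" for value in row))
```

Pixels fully inside or outside the shape come out as 1.0 or 0.0, while pixels crossed by the edge receive an intermediate value, which is what softens the staircase.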

Multi-Sample Anti-Aliasing (MSAA) improves performance compared to SSAA by sampling multiple points within each pixel only at the edges of polygons.

Coverage is evaluated at 4 (or 8) subpixel sample points per pixel, followed by averaging. This still costs extra memory and bandwidth, but typically far less than the factor-of-4 (or 8) slowdown incurred when every sample is fully shaded, as in SSAA. It works well for horizontal and vertical triangle edges. For other edge angles, the gaps between subpixels can cause narrow face breakups.

This method reduces the computational load while still providing good anti-aliasing quality.

Coverage Sampling Anti-Aliasing (CSAA) is an Nvidia-specific technique that improves upon MSAA by increasing the number of coverage samples without significantly increasing the number of color/depth samples. This method provides better edge quality with less performance impact.

Post-processing anti-aliasing techniques are applied after the image has been rendered to smooth out jagged edges.

Fast Approximate Anti-Aliasing (FXAA) is a post-processing technique, created by Timothy Lottes at Nvidia, that smooths edges by analyzing the final image and blending colors at the edges.

This is the cheapest and simplest smoothing algorithm.

In layman's terms, FXAA is applied to the final rendered image and works on pixel data, not geometry. GPUs are particularly fast at executing such shader algorithms in parallel, so it is very quick to render.

FXAA is less computationally intensive than SSAA and MSAA, making it suitable for real-time applications like video games.

Enhanced Subpixel Morphological Anti-Aliasing (SMAA) is a logical development of the FXAA algorithm. It is a post-processing effect applied to the final image that combines edge detection and blending to reduce aliasing. SMAA provides high-quality anti-aliasing with a lower performance cost compared to SSAA and MSAA.

Temporal anti-aliasing techniques use information from previous frames to reduce aliasing in the current frame.

Temporal Anti-Aliasing (TAA) reduces aliasing by using information from previous frames to smooth edges in the current frame. TAA is effective at reducing flickering and shimmering in moving images, but it can introduce ghosting artifacts (visual distortions that appear in images due to a variety of factors, including movement, refraction, and sampling errors) if not implemented correctly.

There are other issues involved in mapping real-number coordinates to pixels.

For example, which point in a pixel should correspond to integer-valued coordinates such as (3,5)? The center of the pixel? One of the corners of the pixel? In general, we think of the numbers as referring to the top-left corner of the pixel.

Another way of thinking about this is to say that integer coordinates refer to the lines between pixels, rather than to the pixels themselves. But that still doesn't determine exactly which pixels are affected when a geometric shape is drawn.

For example, here are two lines drawn using HTML canvas graphics, shown greatly magnified. The lines were specified to be colored black with a one-pixel line width:

The top line was drawn from the point (100,100) to the point (120,100).

In canvas graphics, integer coordinates correspond to the lines between pixels, but when a one-pixel line is drawn, it extends one-half pixel on either side of the infinitely thin geometric line.

So for the top line, the line as it is drawn lies half in one row of pixels and half in another row. The graphics system, which uses anti-aliasing, rendered the line by coloring both rows of pixels gray.

The bottom line was drawn from the point (100.5,100.5) to (120.5,100.5). In this case, the line lies exactly along one line of pixels, which gets colored black. The gray pixels at the ends of the bottom line have to do with the fact that the line only extends halfway into the pixels at its endpoints. Other graphics systems might render the same lines differently.
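The difference between the two lines can be checked numerically. The sketch below assumes, as in the canvas description above, that pixel row `r` spans the vertical range from `r` to `r + 1` and that a stroke extends half its width on either side of the geometric line; `row_coverage` is a made-up helper, not a canvas API.

```python
def row_coverage(line_y, line_width, row):
    """Fraction of pixel row `row` (spanning y in [row, row + 1]) covered by a
    horizontal stroke centered on `line_y`."""
    top = line_y - line_width / 2
    bottom = line_y + line_width / 2
    overlap = min(bottom, row + 1) - max(top, row)
    return max(0.0, overlap)


# Stroke centered on y = 100: split evenly between rows 99 and 100 (both gray).
print(row_coverage(100, 1, 99), row_coverage(100, 1, 100))

# Stroke centered on y = 100.5: row 100 is fully covered (black).
print(row_coverage(100.5, 1, 99), row_coverage(100.5, 1, 100))
```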

All this is complicated further by the fact that pixels aren't what they used to be. Pixels today are smaller!

The resolution of a display device can be measured in terms of the number of pixels per inch on the display, a quantity referred to as PPI (pixels per inch) or sometimes DPI (dots per inch).

While PPI (Pixels Per Inch) and DPI (Dots Per Inch) are often used interchangeably, they refer to different concepts and are used in different contexts.

PPI is a measure of the pixel density of a digital display, such as a computer monitor, smartphone screen, or television. It indicates the number of pixels present in one inch of the display. Higher PPI values mean more pixels are packed into each inch, resulting in sharper and more detailed images.

For example, a display with a resolution of 1920x1080 pixels and a diagonal size of 15.6 inches has a PPI of approximately 141. This means there are 141 pixels in each inch of the display.
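The figure of roughly 141 PPI follows from dividing the number of pixels along the diagonal by the diagonal length in inches. A short sketch of that arithmetic, with an illustrative function name:

```python
import math


def pixels_per_inch(width_px, height_px, diagonal_inches):
    """Pixel density along the diagonal of a display."""
    diagonal_px = math.hypot(width_px, height_px)
    return diagonal_px / diagonal_inches


print(round(pixels_per_inch(1920, 1080, 15.6)))  # 141
```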

DPI is a measure of the resolution of a printed image, indicating the number of individual dots of ink or toner that a printer can produce within one inch. Higher DPI values result in finer detail and smoother gradients in printed images.

For example, a printer with a resolution of 300 DPI can produce 300 dots of ink per inch, resulting in high-quality prints suitable for photographs and detailed graphics.

Both measures are important for ensuring high-quality visuals, but they apply to different mediums.

Early screens tended to have resolutions of somewhere close to 72 PPI. At that resolution, and at a typical viewing distance, individual pixels are clearly visible. For a while, it seemed like most displays had about 100 pixels per inch, but high resolution displays today can have 200, 300 or even 400 pixels per inch. At the highest resolutions, individual pixels can no longer be distinguished.

The fact that pixels come in such a range of sizes is a problem if we use coordinate systems based on pixels. An image created assuming that there are 100 pixels per inch will look tiny on a 400 PPI display. A one-pixel-wide line looks good at 100 PPI, but at 400 PPI, a one-pixel-wide line is probably too thin.

In fact, in many graphics systems, "pixel" doesn't really refer to the size of a physical pixel. Instead, it is just another unit of measure, which is set by the system to be something appropriate. (On a desktop system, a pixel is usually about one one-hundredth of an inch. On a smart phone, which is usually viewed from a closer distance, the value might be closer to 1/160 inch. Furthermore, the meaning of a pixel as a unit of measure can change when, for example, the user applies a magnification to a web page.)

Pixels cause problems that have not been completely solved. Fortunately, they are less of a problem for vector graphics.

For vector graphics, pixels only become an issue during rasterization, the step in which a vector image is converted into pixels for display. The vector image itself can be created using any convenient coordinate system. It represents an idealized, resolution-independent image.

A rasterized image is an approximation of that ideal image, but how to do the approximation can be left to the display hardware.

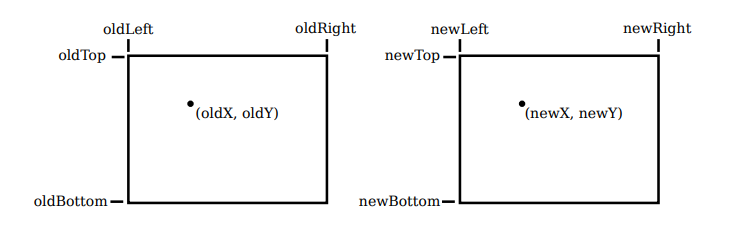

When doing 2D graphics, we are given a rectangle in which we want to draw some graphics primitives. Primitives are specified using some coordinate system on the rectangle. It should be possible to select a coordinate system that is appropriate for the application. For example, if the rectangle represents a floor plan for a 15 foot by 12 foot room, then we might want to use a coordinate system in which the unit of measure is one foot and the coordinates range from 0 to 15 in the horizontal direction and 0 to 12 in the vertical direction. The unit of measure in this case is feet rather than pixels, and one foot can correspond to many pixels in the image. The coordinates for a pixel will, in general, be real numbers rather than integers. In fact, it's better to forget about pixels and just think about points in the image. A point will have a pair of coordinates given by real numbers.

To specify the coordinate system on a rectangle, we just have to specify the horizontal coordinates for the left and right edges of the rectangle and the vertical coordinates for the top and bottom. Let's call these values left, right, top, and bottom. Often, they are thought of as xmin, xmax, ymin, and ymax, but there is no reason to assume that, for example, top is less than bottom. We might want a coordinate system in which the vertical coordinate increases from bottom to top instead of from top to bottom. In that case, top will correspond to the maximum y-value instead of the minimum value.

To allow programmers to specify the coordinate system that they would like to use, it would be good to have a subroutine such as:

setCoordinateSystem(left,right,bottom,top)

The graphics system would then be responsible for automatically transforming the coordinates from the specified coordinate system into pixel coordinates. Such a subroutine might not be available, so it's useful to see how the transformation is done by hand. Let's consider the general case. Given coordinates for a point in one coordinate system, we want to find the coordinates for the same point in a second coordinate system. (Remember that a coordinate system is just a way of assigning numbers to points. It's the points that are real!)

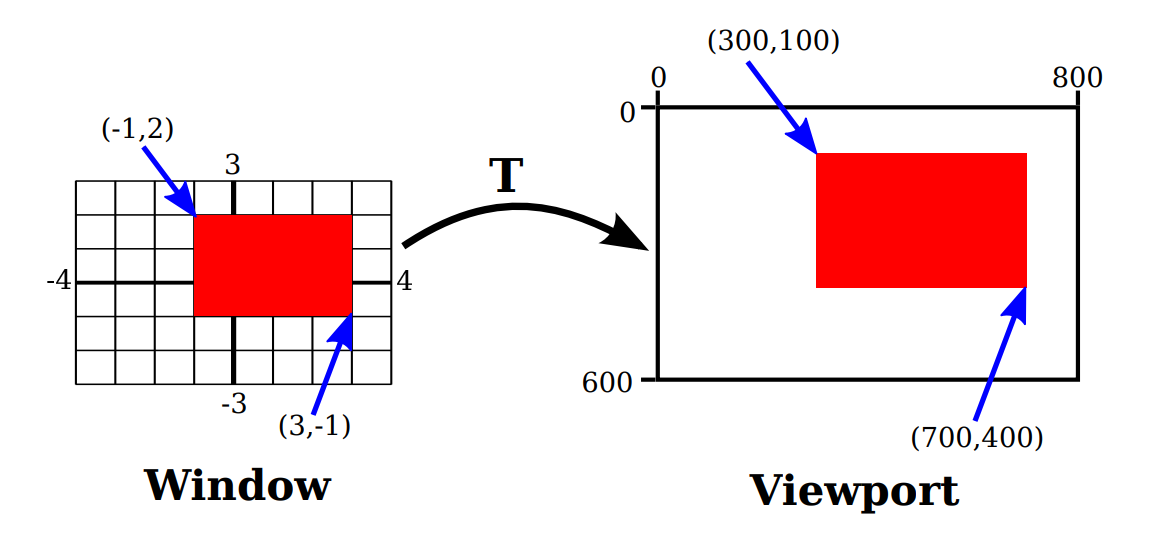

Suppose that the horizontal and vertical limits are oldLeft, oldRight, oldTop, and oldBottom for the first coordinate system, and are newLeft, newRight, newTop, and newBottom for the second. Suppose that a point has coordinates (oldX,oldY) in the first coordinate system. We want to find the coordinates (newX,newY) of the point in the second coordinate system.

Formulas for newX and newY are then given by:

newX = newLeft + ((oldX - oldLeft) / (oldRight - oldLeft)) * (newRight - newLeft)

newY = newTop + ((oldY - oldTop) / (oldBottom - oldTop)) * (newBottom - newTop)

The logic here is that oldX is located at a certain fraction of the distance from oldLeft to oldRight. That fraction is given by:

(oldX - oldLeft) / (oldRight - oldLeft)

The formula for newX just says that newX should lie at the same fraction of the distance from newLeft to newRight. We can also check the formulas by testing that they work when oldX is equal to oldLeft or to oldRight, and when oldY is equal to oldBottom or to oldTop.
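The two formulas translate directly into code. In this sketch, the function name `map_point` and the convention of passing each coordinate system as a `(left, right, top, bottom)` tuple are assumptions; the floor-plan room and the 750 by 600 pixel drawing area are just sample values.

```python
def map_point(old_x, old_y, old, new):
    """Map a point from one coordinate system to another.

    `old` and `new` are (left, right, top, bottom) tuples of limits.
    """
    old_left, old_right, old_top, old_bottom = old
    new_left, new_right, new_top, new_bottom = new
    new_x = new_left + (old_x - old_left) / (old_right - old_left) * (new_right - new_left)
    new_y = new_top + (old_y - old_top) / (old_bottom - old_top) * (new_bottom - new_top)
    return new_x, new_y


room = (0, 15, 12, 0)      # feet; y increases upward, so top = 12 and bottom = 0
screen = (0, 750, 0, 600)  # pixels; y increases downward

print(map_point(0, 12, room, screen))   # top-left corner of the room
print(map_point(7.5, 6, room, screen))  # center of the room
print(map_point(15, 0, room, screen))   # bottom-right corner of the room
```

The corner checks mirror the test suggested above: a point on an old limit should land exactly on the corresponding new limit.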

As an example, suppose that we want to transform some real-number coordinate system with limits left, right, top, and bottom into pixel coordinates that range from 0 at the left to 800 at the right and from 0 at the top to 600 at the bottom. In that case, newLeft and newTop are zero, and the formulas become simply:

newX = ((oldX - left) / (right - left)) * 800

newY = ((oldY - top) / (bottom - top)) * 600

Of course, this gives newX and newY as real numbers, and they will have to be rounded or truncated to integer values if we need integer coordinates for pixels. The reverse transformation—going from pixel coordinates to real number coordinates—is also useful.

For example, if the image is displayed on a computer screen, and we want to react to mouse clicks on the image, we will probably get the mouse coordinates in terms of integer pixel coordinates, but we will want to transform those pixel coordinates into our own chosen coordinate system.
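The reverse transformation uses the same fraction-of-the-distance reasoning, run in the other direction. This sketch assumes the 800 by 600 pixel area from the example above; the function name `pixel_to_world` and the sample limits are hypothetical.

```python
def pixel_to_world(px, py, left, right, top, bottom, width=800, height=600):
    """Convert pixel coordinates (0..width, 0..height) back to the
    coordinate system with the given limits."""
    x = left + (px / width) * (right - left)
    y = top + (py / height) * (bottom - top)
    return x, y


# A mouse click in the middle of the drawing area, with limits
# left = -4, right = 4, top = 3, bottom = -3.
print(pixel_to_world(400, 300, -4, 4, 3, -3))  # (0.0, 0.0)
```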

In practice, though, we won't usually have to do the transformations ourselves, since most graphics APIs provide some higher-level way to specify transforms.

The aspect ratio of a rectangle is the ratio of its width to its height. For example, an aspect ratio of 2:1 means that a rectangle is twice as wide as it is tall, and an aspect ratio of 4:3 means that the width is 4/3 times the height. Although aspect ratios are often written in the form width:height, I will use the term to refer to the fraction width/height. A square has aspect ratio equal to 1. A rectangle with aspect ratio 5/4 and height 600 has a width equal to 600*(5/4), or 750.

A coordinate system also has an aspect ratio. If the horizontal and vertical limits for the coordinate system are left, right, bottom, and top, as above, then the aspect ratio is the absolute value of:

(right - left) / (top - bottom)

If the coordinate system is used on a rectangle with the same aspect ratio, then when viewed in that rectangle, one unit in the horizontal direction will have the same apparent length as a unit in the vertical direction. If the aspect ratios don't match, then there will be some distortion.

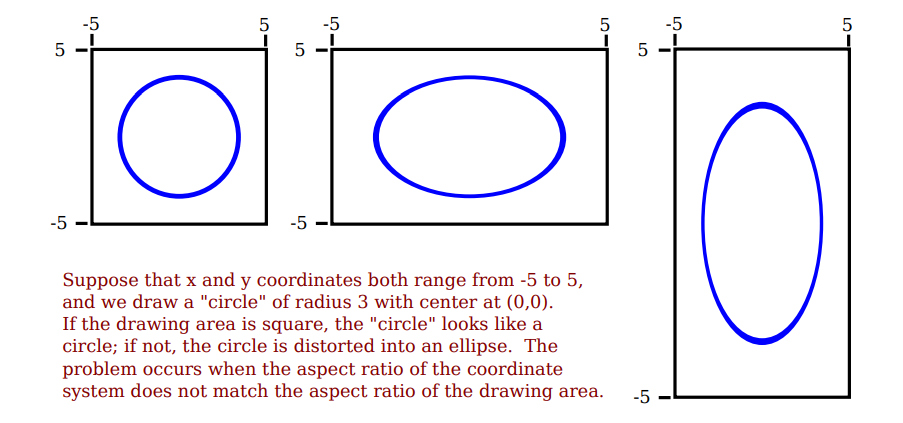

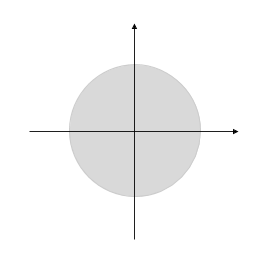

For example, the shape defined by the equation x^2 + y^2 = 9 should be a circle, but that will only be true if the aspect ratio of the (x,y) coordinate system matches the aspect ratio of the drawing area.

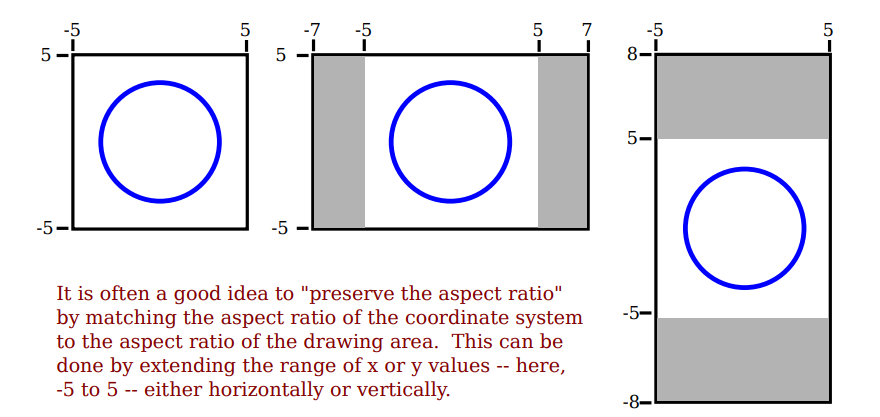

It is not always a bad thing to use different units of length in the vertical and horizontal directions. However, suppose that we want to use coordinates with limits left, right, bottom, and top, and that we do want to preserve the aspect ratio.

In that case, depending on the shape of the display rectangle, we might have to adjust the values either of left and right or of bottom and top to make the aspect ratios match:
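One possible way to make that adjustment is sketched below. It assumes that the requested region should stay centered and only ever be expanded, never cropped; the function name `preserve_aspect` and the parameters `width` and `height` (the size of the display rectangle) are illustrative.

```python
def preserve_aspect(left, right, bottom, top, width, height):
    """Expand the requested limits so that they match a width x height display.

    Returns new (left, right, bottom, top); the original region stays centered.
    """
    display_aspect = abs(width / height)
    window_aspect = abs((right - left) / (top - bottom))
    if display_aspect > window_aspect:
        # Display is relatively wider: extend the horizontal range.
        excess = (right - left) * (display_aspect / window_aspect - 1)
        left -= excess / 2
        right += excess / 2
    elif display_aspect < window_aspect:
        # Display is relatively taller: extend the vertical range.
        excess = (top - bottom) * (window_aspect / display_aspect - 1)
        bottom -= excess / 2
        top += excess / 2
    return left, right, bottom, top


# A square region shown in an 800 x 600 area is widened to about -4..4.
print(preserve_aspect(-3, 3, -3, 3, 800, 600))
```

With limits adjusted this way, a shape such as the circle x^2 + y^2 = 9 is drawn without distortion.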

We are talking about the most basic foundations of computer graphics. One of those is coordinate systems. The other is color.

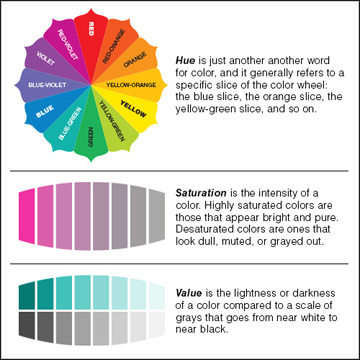

Red, Yellow, and Blue — Primary colors. Or at least, that's what we have been told since kindergarten, isn't it? But there is more to it.

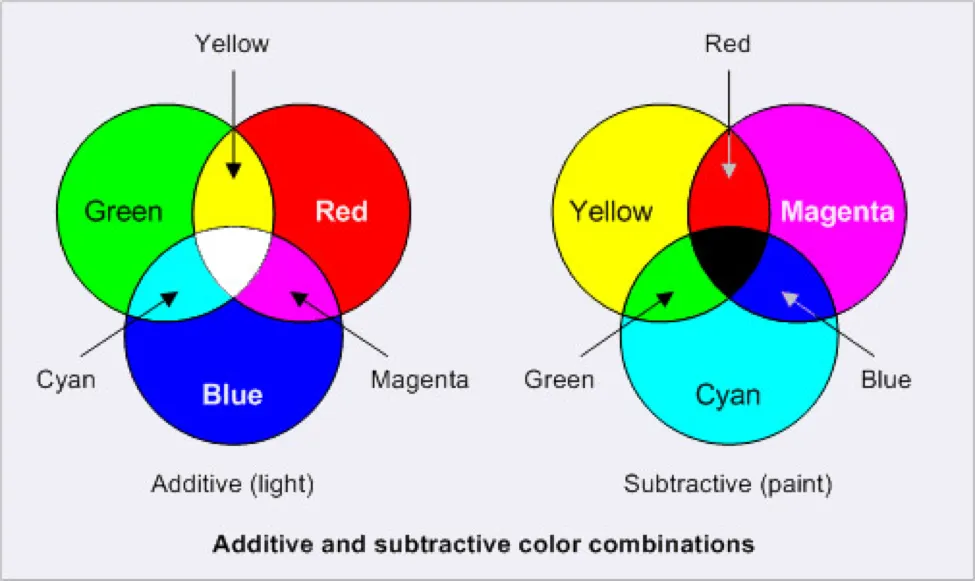

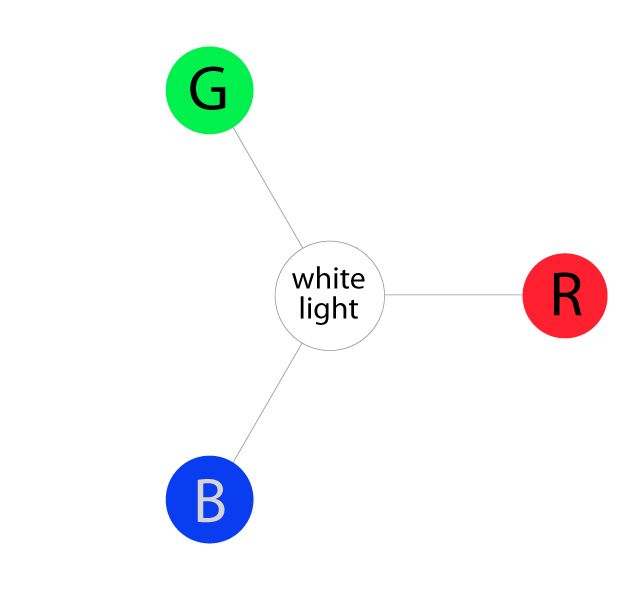

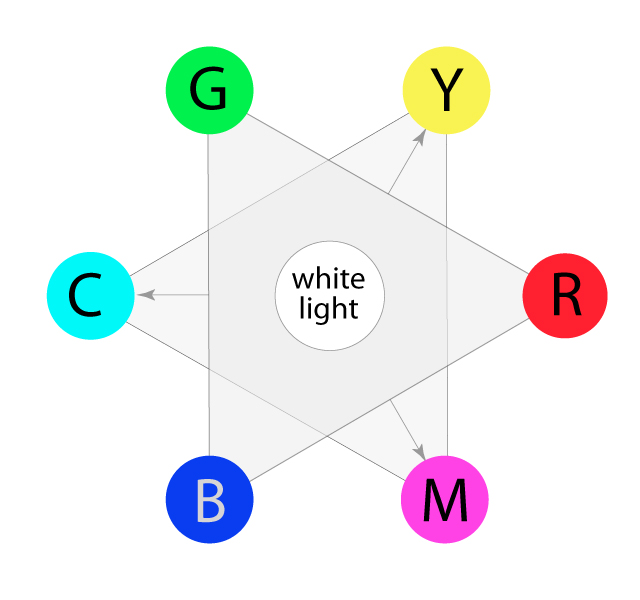

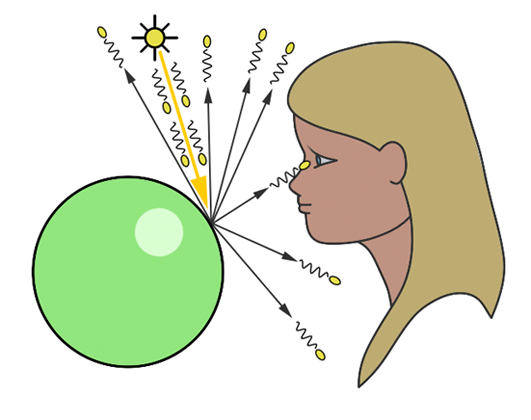

The colors on a computer screen are produced as combinations of red, green, and blue light.

Now the question is — if RYB is the primary color set then why do computers use RGB instead?

To answer that, we first need to understand some color theory.

There are two different theories of color mixing: additive and subtractive.

Different colors are produced by varying the intensity of each type of light. A color can be specified by three numbers giving the intensity of red, green, and blue in the color. Intensity can be specified as a number in the range zero, for minimum intensity, to one, for maximum intensity.

Additive mixing is what happens when one or more colored lights (wavelengths) are projected together. Mixing these colors produces more light.

This method of specifying color is called the RGB color model, where RGB stands for Red/Green/Blue.

The red, green, and blue values for a color are called the color components of that color in the RGB color model. Mixing them produces lighter colors, ending in white light when all three are at full intensity. That is how computer monitors, TVs, and other light-emitting screens work.

Each parameter (red, green, and blue) defines the intensity of the color with a value between 0 and 255.

This means that there are 256 x 256 x 256 = 16777216 possible colors!

For example, rgb(255, 0, 0) is displayed as red, because red is set to its highest value (255), and the other two (green and blue) are set to 0.

Another example, rgb(0, 255, 0) is displayed as green, because green is set to its highest value (255), and the other two (red and blue) are set to 0.

To display black, set all color parameters to 0, like this: rgb(0, 0, 0).

To display white, set all color parameters to 255, like this: rgb(255, 255, 255).
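The two ways of writing a color component, as an intensity from zero to one or as an integer from 0 to 255, are easy to convert between. The helper names below are made up for illustration, and the hexadecimal form is the familiar `#rrggbb` notation used on the web.

```python
def to_8bit(r, g, b):
    """Convert intensities in the range 0.0 to 1.0 into integers from 0 to 255."""
    return tuple(round(c * 255) for c in (r, g, b))


def to_hex(r, g, b):
    """Format 8-bit components as a #rrggbb string."""
    return "#{:02x}{:02x}{:02x}".format(r, g, b)


print(to_8bit(1.0, 0.0, 0.0))  # (255, 0, 0), pure red
print(to_hex(255, 0, 0))       # #ff0000
print(to_hex(0, 0, 0))         # #000000, black
print(256 ** 3)                # 16777216 possible colors
```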

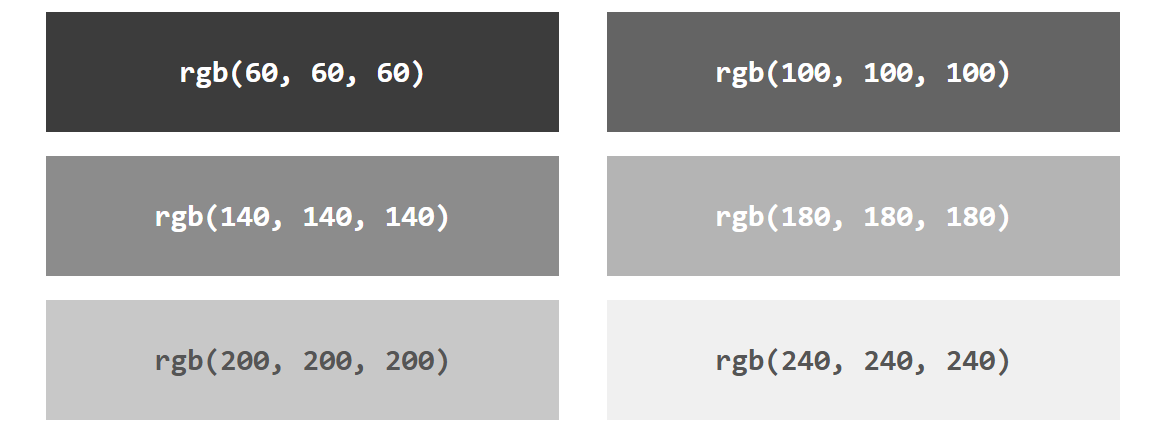

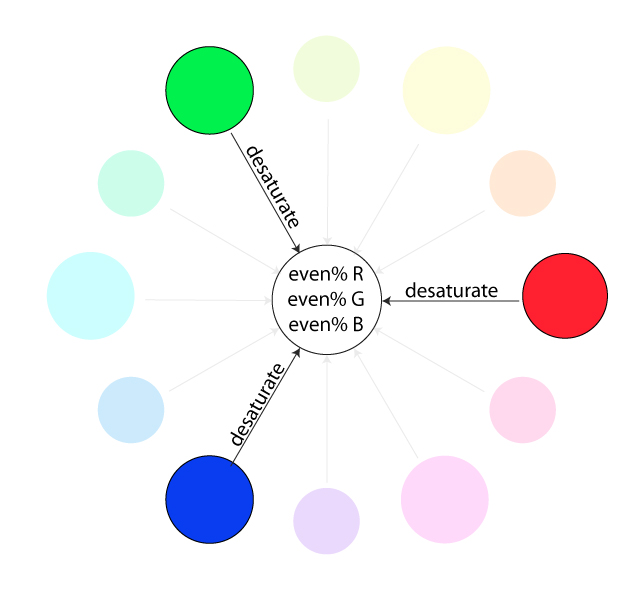

Shades of gray are often defined using equal values for all three parameters:

Light is made up of waves with a variety of wavelengths. A pure color is one for which all the light has the same wavelength, but in general, a color can contain many wavelengths— mathematically, an infinite number. How then can we represent all colors by combining just red, green, and blue light? In fact, we can't quite do that.

We might have heard that combinations of the three basic, or "primary" colors are sufficient to represent all colors, because the human eye has three kinds of color sensors that detect red, green, and blue light. However, that is only an approximation. The eye does contain three kinds of color sensors. The sensors are called "cone cells."

However, cone cells do not respond exclusively to red, green, and blue light. Each kind of cone cell responds, to a varying degree, to wavelengths of light in a wide range. A given mix of wavelengths will stimulate each type of cell to a certain degree, and the intensity of stimulation determines the color that we see. A different mixture of wavelengths that stimulates each type of cone cell to the same extent will be perceived as the same color.

So a perceived color can, in fact, be specified by three numbers giving the intensity of stimulation of the three types of cone cell. However, it is not possible to produce all possible patterns of stimulation by combining just three basic colors, no matter how those colors are chosen. This is just a fact about the way our eyes actually work; it might have been different.

Three basic colors can produce a reasonably large fraction of the set of perceivable colors, but there are colors that we can see in the world that we will never see on our computer screen. (This whole discussion only applies to people who actually have three kinds of cone cell. Color blindness, where someone is missing one or more kinds of cone cell, is surprisingly common.)

The range of colors that can be produced by a device such as a computer screen is called the color gamut of that device. Different computer screens can have different color gamuts, and the same RGB values can produce somewhat different colors on different screens. The color gamut of a color printer is noticeably different—and probably smaller—than the color gamut of a screen, which explains why a printed image probably doesn't look exactly the same as it did on the screen.

When we mix paints or inks, subtractive mixing results. Paints and inks do not emit light; they reflect the light that falls on them. Molecules of paint absorb some wavelengths of light and reflect the rest. That's how we see such objects.

Printers, by the way, make colors differently from the way a screen does it. Whereas a screen combines light to make a color, a printer combines inks or dyes. Because of this difference, colors meant for printers are often expressed using a different set of basic colors.

The primary colors of the subtractive mix are CMYK: Cyan, Magenta, Yellow, and K, which stands for black (to distinguish it from B for Blue; this is just a convention).

When C, M, and Y (without K) are mixed, they produce a brownish, somewhat muddy color. To get a truer black, the additional K for black is used. CMYK is the model used by printers and publishing houses.
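The relationship between the two models can be illustrated with a commonly quoted, simplified conversion from RGB to CMYK. This is only a sketch: it assumes idealized inks and RGB intensities between 0 and 1, whereas real print workflows rely on device color profiles, so the numbers should not be taken as what a printer would actually use.

```python
def rgb_to_cmyk(r, g, b):
    """Naive conversion of RGB intensities (0..1) to CMYK ink fractions (0..1)."""
    k = 1.0 - max(r, g, b)
    if k == 1.0:
        # Pure black: only the K ink is needed.
        return 0.0, 0.0, 0.0, 1.0
    c = (1.0 - r - k) / (1.0 - k)
    m = (1.0 - g - k) / (1.0 - k)
    y = (1.0 - b - k) / (1.0 - k)
    return c, m, y, k


print(rgb_to_cmyk(1, 0, 0))  # red is printed with magenta and yellow ink
print(rgb_to_cmyk(0, 0, 0))  # black uses only K
print(rgb_to_cmyk(1, 1, 1))  # white uses no ink at all
```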

In any case, the most common color model for computer graphics is RGB. RGB colors are most often represented using 8 bits per color component, a total of 24 bits to represent a color. This representation is sometimes called "24-bit color." An 8-bit number can represent 2^8, or 256, different values, which we can take to be the integers from 0 to 255. A color is then specified as a triple of integers (r,g,b) in that range.

This representation works well because 256 shades of red, green, and blue are about as many as the eye can distinguish. In applications where images are processed by computing with color components, it is common to use additional bits per color component to avoid visual effects that might occur due to rounding errors in the computations. Such applications might use a 16-bit integer or even a 32-bit floating point value for each color component. On the other hand, sometimes fewer bits are used.

For example, one common color scheme uses 5 bits for the red and blue components and 6 bits for the green component, for a total of 16 bits for a color. (Green gets an extra bit because the eye is more sensitive to green light than to red or blue.) This “16-bit color” saves memory compared to 24-bit color and was more common when memory was more expensive.
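Packing a color into 16 bits comes down to dropping the low bits of each component and shifting the pieces into place. The sketch below assumes the frequently used layout with red in the highest bits and blue in the lowest; other orderings exist, and the function names are illustrative.

```python
def pack_rgb565(r, g, b):
    """Pack 8-bit components into 16 bits: 5 bits red, 6 bits green, 5 bits blue."""
    return ((r >> 3) << 11) | ((g >> 2) << 5) | (b >> 3)


def unpack_rgb565(value):
    """Expand a 16-bit color back to approximate 8-bit components."""
    r5 = (value >> 11) & 0x1F
    g6 = (value >> 5) & 0x3F
    b5 = value & 0x1F
    # Replicate the high bits into the low bits so full intensity maps to 255.
    return (r5 << 3) | (r5 >> 2), (g6 << 2) | (g6 >> 4), (b5 << 3) | (b5 >> 2)


print(hex(pack_rgb565(255, 0, 0)))                 # 0xf800
print(unpack_rgb565(pack_rgb565(255, 255, 255)))   # (255, 255, 255)
print(unpack_rgb565(pack_rgb565(200, 100, 50)))    # close to, but not exactly, the input
```

The last line shows the price of the saved memory: the discarded low bits cannot be recovered, so most colors come back slightly changed.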

There are many other color models besides RGB. RGB is sometimes criticized as being unintuitive. For example, it's not obvious to most people that yellow is made of a combination of red and green.

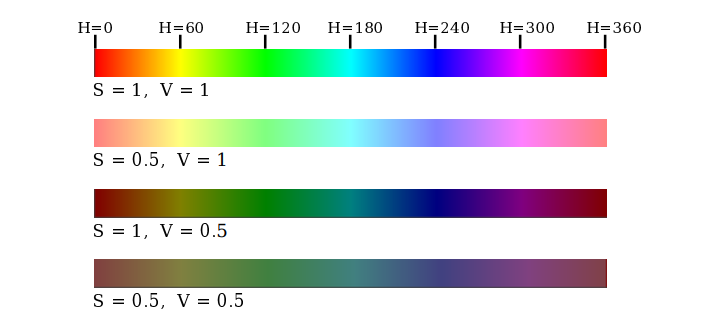

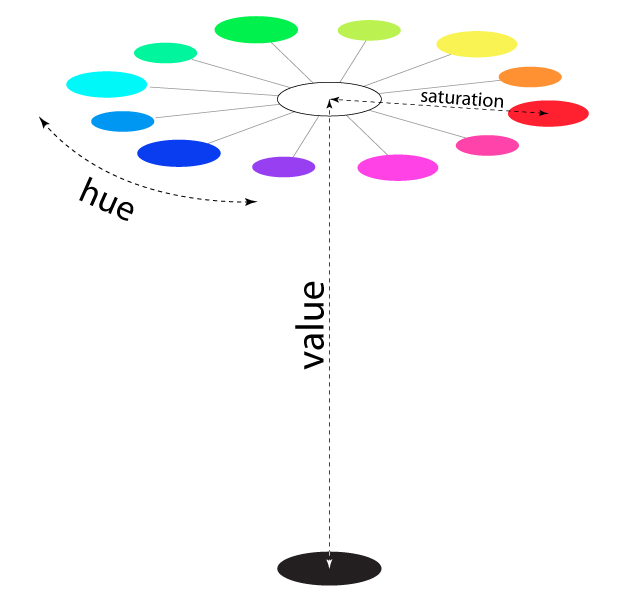

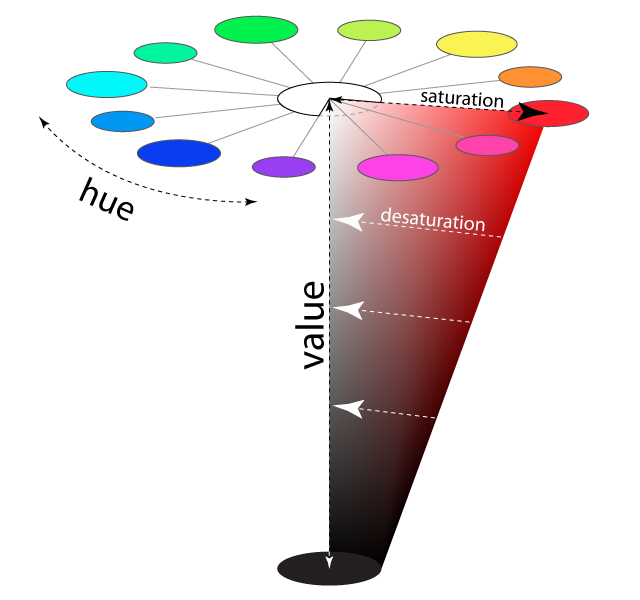

The closely related color models HSV and HSL describe the same set of colors as RGB, but attempt to do it in a more intuitive way. (HSV is sometimes called HSB, with the "B" standing for "brightness"; HSV and HSB are exactly the same model.)

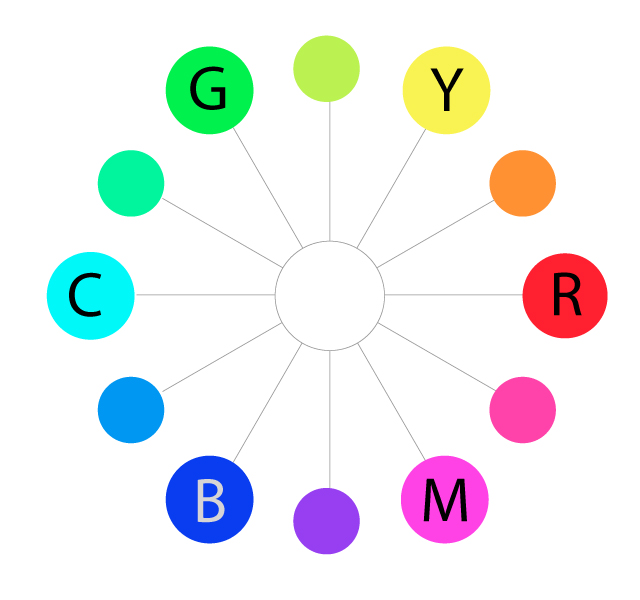

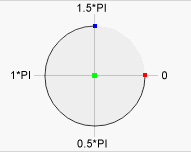

The "H" in these models stands for "hue", a basic spectral color. As H increases, the color changes from red to yellow to green to cyan to blue to magenta, and then back to red. The value of H is often taken to range from 0 to 360, since the colors can be thought of as arranged around a circle with red at both 0 and 360 degrees.

The "S" in HSV and HSL stands for "saturation" and is taken to range from 0 to 1. A saturation of 0 gives a shade of gray (the shade depending on the value of V or L). A saturation of 1 gives a "pure color" and decreasing the saturation is like adding more gray to the color.

"V" stands for "value" and "L" stands for "lightness". They determine how bright or dark the color is. The main difference is that in the HSV model, the pure spectral colors occur for V=1, while in HSL, they occur for L=0.5.

Let's look at some colors in the HSV color model. The illustration below shows colors with a full range of H-values, for S and V equal to 1 and to 0.5. Note that for S=V=1, we get bright, pure colors. S=0.5 gives paler, less saturated colors. V=0.5 gives darker colors.
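These relationships can be explored with Python's standard `colorsys` module, which expects hue as a fraction between 0 and 1 rather than in degrees. The wrapper names below are assumptions for illustration; note that `colorsys.hls_to_rgb` takes its arguments in the order hue, lightness, saturation.

```python
import colorsys


def hsv_to_rgb255(h_degrees, s, v):
    """Convert HSV (hue in degrees, S and V in 0..1) to 8-bit RGB."""
    r, g, b = colorsys.hsv_to_rgb((h_degrees % 360) / 360.0, s, v)
    return tuple(round(c * 255) for c in (r, g, b))


def hsl_to_rgb255(h_degrees, s, l):
    """Convert HSL (hue in degrees, S and L in 0..1) to 8-bit RGB."""
    r, g, b = colorsys.hls_to_rgb((h_degrees % 360) / 360.0, l, s)
    return tuple(round(c * 255) for c in (r, g, b))


print(hsv_to_rgb255(0, 1, 1))    # pure red
print(hsv_to_rgb255(60, 1, 1))   # yellow: full red plus full green
print(hsv_to_rgb255(120, 1, 1))  # pure green
print(hsv_to_rgb255(0, 0.5, 1))  # paler, less saturated red
print(hsv_to_rgb255(0, 1, 0.5))  # darker red
print(hsl_to_rgb255(0, 1, 0.5))  # in HSL, the pure color occurs at L = 0.5
```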

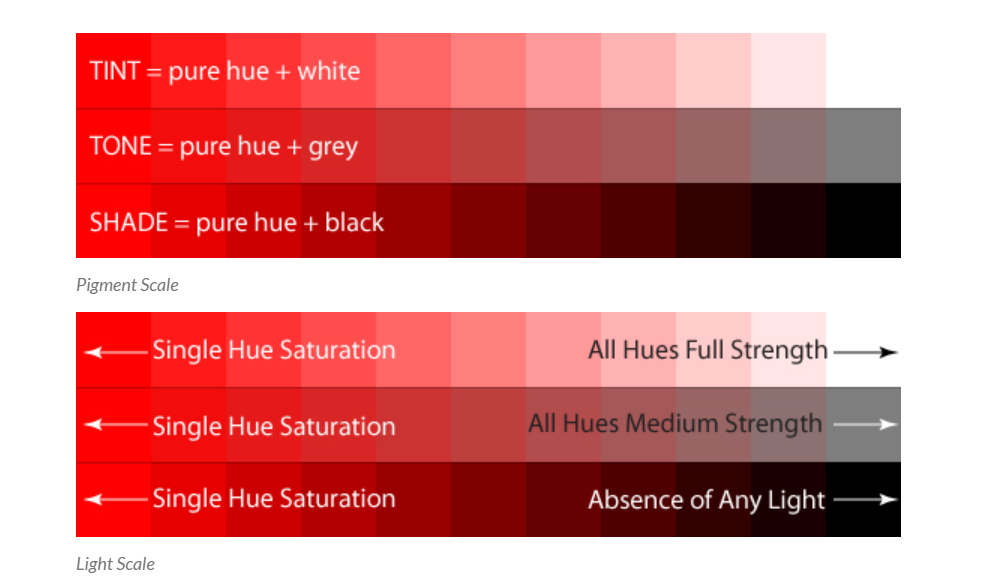

In the simple scale diagrams below, the first model indicates amount of black, white, or grey pigment added to the hue. The second model illustrates the same scale but explains the phenomenon based on light [spectral] properties.

Whichever of the two models, additive or subtractive, is in use, all color is a result of how our eyes physically process light waves. So let's start with the additive model of light to see how it feeds into the subtractive model, and to see how hue, value, and saturation interact to produce unique colors.

The three primary hues in light are red, green, and blue. That is why televisions, computer monitors, and other full-range electronic color displays use a triad of red, green, and blue phosphors to produce all electronically communicated color.

As we mentioned before, in light, all three of these wavelengths added together at full strength produce pure white light. The absence of all three of these colors produces complete darkness, or black.

Although additive and subtractive color models are considered their own unique entities for screen vs. print purposes, the hues CMY do not exist in a vacuum.

They are produced as secondary colors when RGB light hues are mixed: red and green give yellow, green and blue give cyan, and blue and red give magenta.

The colors on the outermost perimeter of the color circle are the "hues", which are colors in their purest form. This process can continue filling in colors around the wheel. The next level colors, the tertiary colors, are those colors between the secondary and primary colors.

Saturation is also referred to as "intensity" and "chroma". It refers to the dominance of hue in the color. On the outer edge of the hue wheel are the "pure" hues. As we move into the center of the wheel, the hue we are using to describe the color dominates less and less. When we reach the center of the wheel, no hue dominates. These colors directly on the central axis are considered desaturated.

Naturally, the opposite of desaturating a color is saturating it.

The first example below describes the general direction color must move on the color circle to become more saturated (towards the outside). The second example depicts how a single color looks completely saturated, having no other hues present in the color.

Now let's add "value" to the HSV scale. Value is the dimension of lightness/darkness. In terms of a spectral definition of color, value describes the overall intensity or strength of the light. If hue can be thought of as a dimension going around a wheel, then value is a linear axis running through the middle of the wheel, as seen below:

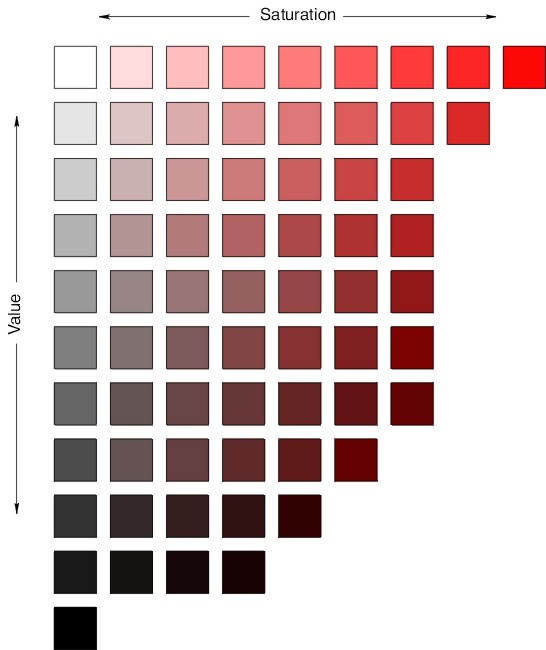

To visualize this better, look at the example below showing a full color range for a single hue:

Now, if we imagine that each hue was also represented as a slice like the one above, we would have a solid, upside-down cone of colors. The example above can be considered a slice of the cone. Notice how the right-most edge of this cone slice shows the greatest amount of the dominant red hue (least amount of other competing hues), and how, as we go down vertically, it gets darker in "value".

Also notice that as we travel from right to left in the cone, the hue becomes less dominant and eventually becomes completely desaturated along the vertical center of the cone. This vertical center axis of complete desaturation is referred to as grayscale.

See how this slice below translates into some isolated color swatches:

Often, a fourth component is added to color models. The fourth component is called alpha, and color models that use it are referred to by names such as RGBA and HSLA. Alpha is not a color as such. It is usually used to represent transparency.

A color with maximal alpha value is fully opaque; that is, it is not at all transparent. A color with alpha equal to zero is completely transparent and therefore invisible. Intermediate values give translucent, or partly transparent, colors.

Transparency determines what happens when we draw with one color (the foreground color) on top of another color (the background color). If the foreground color is fully opaque, it simply replaces the background color. If the foreground color is partly transparent, then it is blended with the background color.

Assuming that the alpha component ranges from 0 to 1, the color that we get can be computed as:

new_color = (alpha)*(foreground_color) + (1 - alpha)*(background_color)

This computation is done separately for the red, blue, and green color components. This is called alpha blending. The effect is like viewing the background through colored glass; the color of the glass adds a tint to the background color. This type of blending is not the only possible use of the alpha component, but it is the most common.
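The blending formula can be sketched in a few lines of code. The function name `blend` and the representation of a color as an array `[r, g, b]` with components from 0 to 1 are assumptions made for this illustration.

```javascript
// Alpha-blend a foreground color over a background color.
// foreground, background: [r, g, b] arrays with components from 0 to 1
// alpha: opacity of the foreground, from 0 (transparent) to 1 (opaque)
function blend(foreground, background, alpha) {
  // Apply the same formula separately to each color component.
  return foreground.map(
    (f, i) => alpha * f + (1 - alpha) * background[i]
  );
}
```

For example, blending red `[1, 0, 0]` over blue `[0, 0, 1]` with alpha 0.5 gives `[0.5, 0, 0.5]`, a purple halfway between the two. With alpha 1 the result is the foreground color, and with alpha 0 it is the background color.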

An RGBA color model with 8 bits per component uses a total of 32 bits to represent a color. This is a convenient number because integer values are often represented using 32-bit values. A 32-bit integer value can be interpreted as a 32-bit RGBA color.

How the color components are arranged within a 32-bit integer is somewhat arbitrary.

The most common layout is to store the alpha component in the eight high-order bits, followed by red, green, and blue. (This should probably be called ARGB color.) However, other layouts are also in use.
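As an illustration of the layout with alpha in the high-order bits, the following sketch packs four 8-bit components into one 32-bit integer and unpacks them again. The function names `packArgb` and `unpackArgb` are hypothetical, and each component is assumed to be an integer from 0 to 255.

```javascript
// Pack 8-bit alpha, red, green, and blue values into one 32-bit integer,
// with alpha in the eight high-order bits (ARGB order).
function packArgb(a, r, g, b) {
  const packed =
    ((a & 0xff) << 24) | ((r & 0xff) << 16) | ((g & 0xff) << 8) | (b & 0xff);
  // JavaScript bitwise operators produce signed 32-bit results;
  // ">>> 0" reinterprets the result as an unsigned 32-bit integer.
  return packed >>> 0;
}

// Extract the four 8-bit components from a 32-bit ARGB integer.
function unpackArgb(color) {
  return {
    a: (color >>> 24) & 0xff,
    r: (color >>> 16) & 0xff,
    g: (color >>> 8) & 0xff,
    b: color & 0xff,
  };
}
```

Under these assumptions, fully opaque red packs to `0xffff0000`. A system that uses a different layout, such as RGBA, would only need different shift amounts.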

We have been talking about low-level graphics concepts like pixels and coordinates, but fortunately we don't usually have to work on the lowest levels. Most graphics systems let us work with higher-level shapes, such as triangles and circles, rather than individual pixels.

In a graphics API, there will be certain basic shapes that can be drawn with one command, whereas more complex shapes will require multiple commands. Exactly what qualifies as a basic shape varies from one API to another.

For example, the HTML5 canvas API provides commands to draw rectangles, circles, and lines, but not triangles. To draw a triangle, we have to draw three lines.
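As a sketch of how a triangle can be built from lines on an HTML5 canvas, the function below traces a path through three points and strokes it. The name `strokeTriangle` is hypothetical, and `ctx` is assumed to be a 2D drawing context obtained from a canvas element with `getContext("2d")`.

```javascript
// Stroke the outline of a triangle using canvas path commands.
// ctx: a canvas 2D drawing context (assumed to be supplied by the caller)
function strokeTriangle(ctx, x1, y1, x2, y2, x3, y3) {
  ctx.beginPath();    // start a new path
  ctx.moveTo(x1, y1); // move to the first corner without drawing
  ctx.lineTo(x2, y2); // first side
  ctx.lineTo(x3, y3); // second side
  ctx.closePath();    // third side, back to the first corner
  ctx.stroke();       // draw the outline with the current stroke style
}
```

In a browser, this might be called as `strokeTriangle(canvas.getContext("2d"), 50, 10, 90, 80, 10, 80)`, where `canvas` is a canvas element on the page.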

By "line", we really mean a line segment, that is, a straight line connecting two given points in the plane. A simple one-pixel-wide line segment, without anti-aliasing, is the most basic shape. It can be drawn by coloring pixels that lie along the infinitely thin geometric line segment.

An algorithm for drawing the line has to decide exactly which pixels to color. One of the first computer graphics algorithms, Bresenham's algorithm for line drawing, implements a very efficient procedure for doing so.
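To give an idea of how such an algorithm chooses pixels, here is a minimal sketch of a common integer-only formulation of Bresenham's algorithm that handles lines in any direction. The function name `bresenhamLine` is an assumption, the endpoints are assumed to be integer pixel coordinates, and the function returns the list of pixels instead of drawing them.

```javascript
// Return the pixels on a one-pixel-wide line from (x0, y0) to (x1, y1).
// All coordinates are assumed to be integers.
function bresenhamLine(x0, y0, x1, y1) {
  const pixels = [];
  const dx = Math.abs(x1 - x0);
  const dy = -Math.abs(y1 - y0);
  const sx = x0 < x1 ? 1 : -1; // step direction in x
  const sy = y0 < y1 ? 1 : -1; // step direction in y
  let err = dx + dy;           // running error term, kept as an integer
  let x = x0;
  let y = y0;
  while (true) {
    pixels.push([x, y]);
    if (x === x1 && y === y1) break;
    const e2 = 2 * err;
    if (e2 >= dy) { err += dy; x += sx; } // step horizontally
    if (e2 <= dx) { err += dx; y += sy; } // step vertically
  }
  return pixels;
}
```

For the line from (0,0) to (3,1), this sketch produces the pixels (0,0), (1,0), (2,1), and (3,1). Only integer additions and comparisons are used inside the loop, which is a large part of why the algorithm is efficient.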

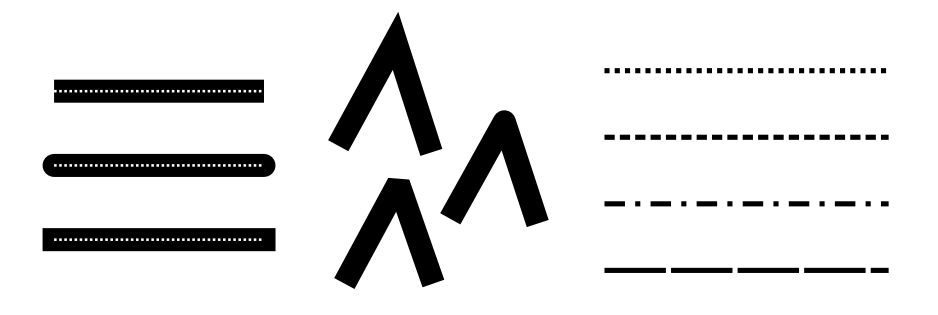

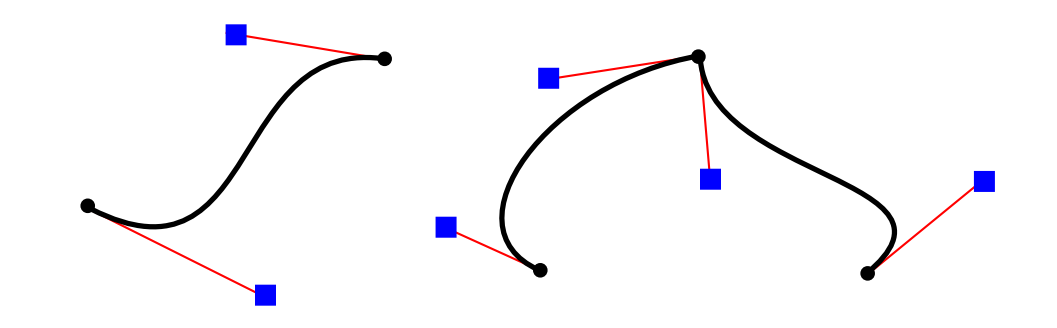

In any case, lines are typically more complicated. Anti-aliasing is one complication. Line width is another. A wide line might actually be drawn as a rectangle.

Lines can have other attributes, or properties, that affect their appearance. One question is, what should happen at the end of a wide line?

Appearance might be improved by adding a rounded "cap" on the ends of the line. A square cap—that is, extending the line by half of the line width—might also make sense.

Another question is, when two lines meet as part of a larger shape, how should the lines be joined? And many graphics systems support lines that are patterns of dashes and dots.

This illustration shows some of the possibilities:

On the left are three wide lines with no cap, a round cap, and a square cap. The geometric line segment is shown as a dotted line. (The no-cap style is called “butt.”) To the right are four lines with different patterns of dots and dashes. In the middle are three different styles of line joins: mitered, rounded, and beveled.

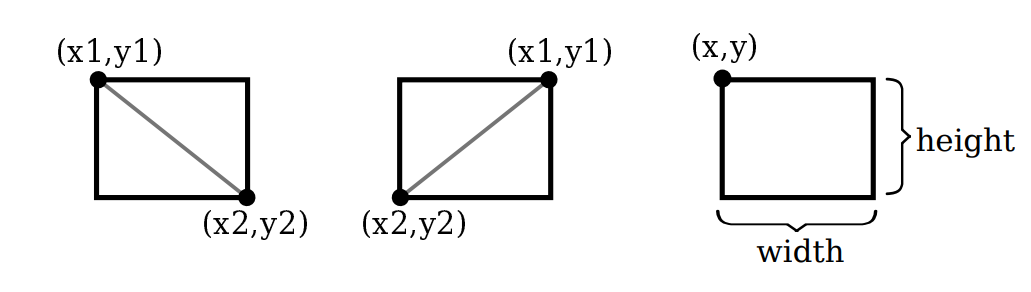

The basic rectangular shape has sides that are vertical and horizontal. (A tilted rectangle generally has to be made by applying a rotation.) Such a rectangle can be specified with two points, (x1,y1) and (x2,y2), that give the endpoints of one of the diagonals of the rectangle. Alternatively, the width and the height can be given, along with a single base point, (x,y). In that case, the width and height have to be positive, or the rectangle is empty. The base point (x,y) will be the upper left corner of the rectangle if y increases from top to bottom, and it will be the lower left corner of the rectangle if y increases from bottom to top.

Suppose that we are given points (x1,y1) and (x2,y2), and that we want to draw the rectangle that they determine. And suppose that the only rectangle-drawing command that we have available is one that requires a point (x,y), a width, and a height. For that command, x must be the smaller of x1 and x2, and the width can be computed as the absolute value of x1 minus x2. The same reasoning applies to y and the height.

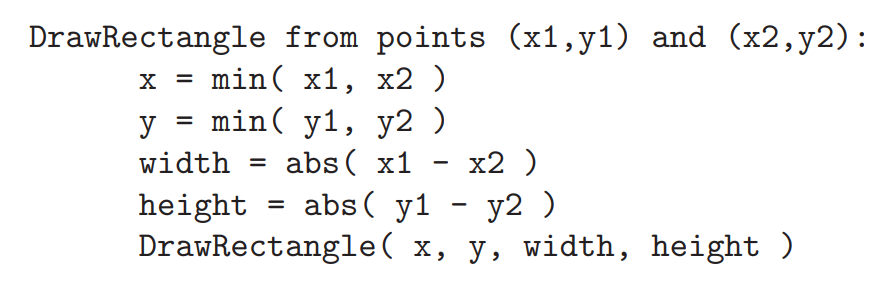

In pseudocode,

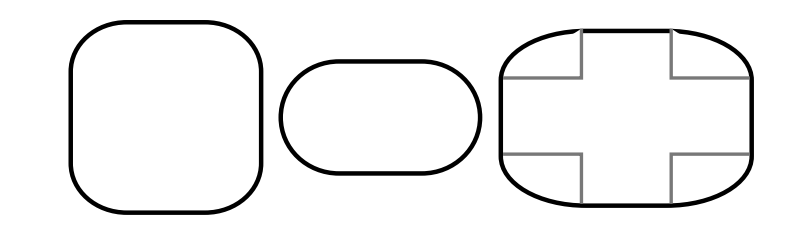
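The same computation can be written as a short function. The name `rectFromPoints` and the returned object shape are assumptions made for this sketch.

```javascript
// Convert two diagonal corner points into the (x, y, width, height)
// form required by many rectangle-drawing commands.
function rectFromPoints(x1, y1, x2, y2) {
  return {
    x: Math.min(x1, x2),        // the smaller of the two x-coordinates
    y: Math.min(y1, y2),        // the smaller of the two y-coordinates
    width: Math.abs(x1 - x2),   // always non-negative
    height: Math.abs(y1 - y2),
  };
}
```

On an HTML5 canvas, the result `r` could then be passed to a command such as `ctx.strokeRect(r.x, r.y, r.width, r.height)`, where `ctx` is assumed to be a 2D drawing context.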

A common variation on rectangles is to allow rounded corners. For a “round rect,” the corners are replaced by elliptical arcs. The degree of rounding can be specified by giving the horizontal radius and vertical radius of the ellipse.

Here are some examples of round rects. For the shape at the right, the two radii of the ellipse are shown:

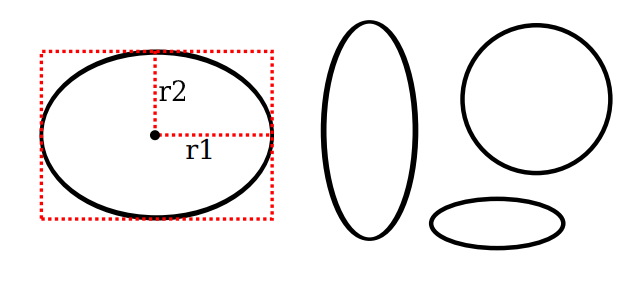

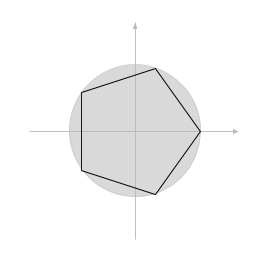

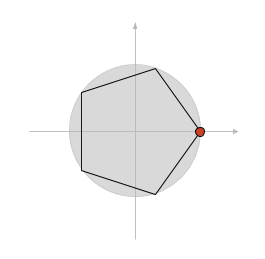

Our final basic shape is the oval. (An oval is also called an ellipse.) An oval is a closed curve that has two radii. For a basic oval, we assume that the radii are vertical and horizontal. An oval with this property can be specified by giving the rectangle that just contains it. Or it can be specified by giving its center point and the lengths of its vertical radius and its horizontal radius.

In this illustration, the oval on the left is shown with its containing rectangle and with its center point and radii:

The oval on the right is a circle. A circle is just an oval in which the two radii have the same length.

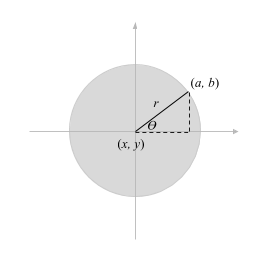

If ovals are not available as basic shapes, they can be approximated by drawing a large number of line segments. The number of lines that is needed for a good approximation depends on the size of the oval. It's useful to know how to do this. Suppose that an oval has center point (x,y), horizontal radius r1, and vertical radius r2. Mathematically, the points on the oval are given by:

( x + r1*cos(angle), y + r2*sin(angle) )

where angle takes on values from 0 to 360 if angles are measured in degrees or from 0 to 2π if they are measured in radians. Here sin and cos are the standard sine and cosine functions. To get an approximation for an oval, we can use this formula to generate some number of points and then connect those points with line segments.

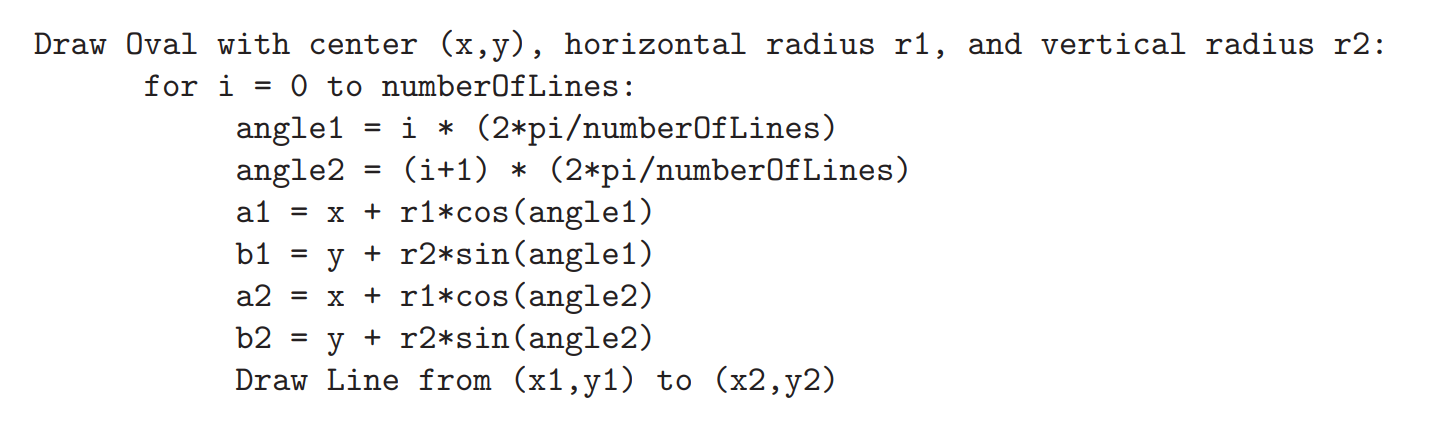

In pseudocode, assuming that angles are measured in radians and that pi represents the mathematical constant π,

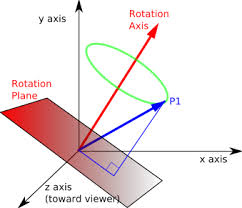
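A runnable version of this idea might look like the sketch below. The function name `ovalPoints` and the default of 32 segments are assumptions; the function only generates the points, and connecting consecutive points (and the last point back to the first) with line segments gives the approximate oval.

```javascript
// Generate points on an oval with center (x, y), horizontal radius r1,
// and vertical radius r2. Angles are measured in radians.
function ovalPoints(x, y, r1, r2, segments = 32) {
  const points = [];
  for (let i = 0; i < segments; i++) {
    const angle = (2 * Math.PI * i) / segments; // evenly spaced from 0 to 2π
    points.push([x + r1 * Math.cos(angle), y + r2 * Math.sin(angle)]);
  }
  return points;
}
```

A larger oval generally needs more segments to look smooth, so `segments` is left as a parameter.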

For a circle, of course, we would just have r1 = r2. This is the first time we have used the sine and cosine functions, but it won't be the last. These functions play an important role in computer graphics because of their association with circles, circular motion, and rotation. We will meet them again when we talk about transforms later.

There are two ways to make a shape visible in a drawing.

We can stroke it. Or, if it is a closed shape such as a rectangle or an oval, we can fill it.

Stroking a line is like dragging a pen along the line. Stroking a rectangle or oval is like dragging a pen along its boundary.

Filling a shape means coloring all the points that are contained inside that shape.

It's possible to both stroke and fill the same shape; in that case, the interior of the shape and the outline of the shape can have a different appearance.

When a shape intersects itself, like the two shapes in the illustration below, it's not entirely clear what should count as the interior of the shape.

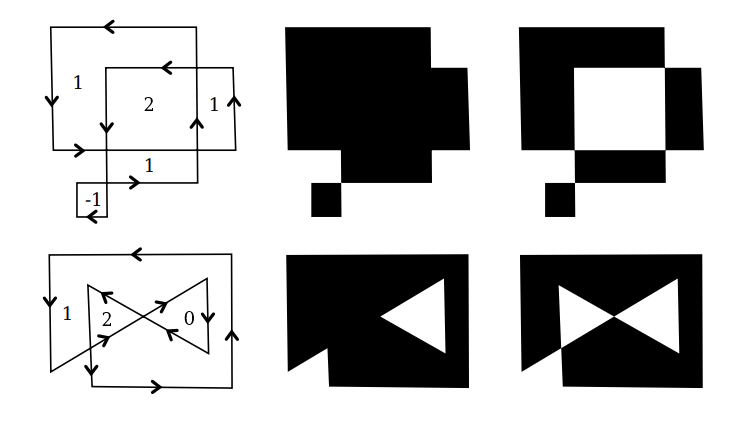

In fact, there are at least two different rules for filling such a shape. Both are based on something called the winding number. The winding number of a shape about a point is, roughly, how many times the shape winds around the point in the positive direction, which we'll take here to be counterclockwise.

Winding number can be negative when the winding is in the opposite direction.

In the illustration, the shapes on the left are traced in the direction shown, and the winding number about each region is shown as a number inside the region.

The shapes are also shown filled using the two fill rules.

For the shapes in the center, the fill rule is to color any region that has a non-zero winding number.

For the shapes shown on the right, the rule is to color any region whose winding number is odd; regions with even winding number are not filled.
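The two fill rules can be sketched for a polygon given as a list of vertices. This is one common way to compute a winding number (counting signed edge crossings of a horizontal ray), not necessarily the method used by any particular graphics system. The names `windingNumber` and `isFilled`, and the rule names `"nonzero"` and `"evenodd"`, are assumptions. The sketch assumes a coordinate system in which y increases upward, so that counterclockwise winding counts as positive; if y increases downward, the sign is reversed, but neither fill rule depends on the sign.

```javascript
// Positive if p lies to the left of the directed line from a to b,
// negative if it lies to the right, zero if it lies on the line.
function isLeft(a, b, p) {
  return (b[0] - a[0]) * (p[1] - a[1]) - (p[0] - a[0]) * (b[1] - a[1]);
}

// Winding number of a closed polygon (array of [x, y] vertices) about p.
function windingNumber(polygon, p) {
  let wn = 0;
  for (let i = 0; i < polygon.length; i++) {
    const a = polygon[i];
    const b = polygon[(i + 1) % polygon.length]; // wrap to close the shape
    if (a[1] <= p[1]) {
      // Edge crosses upward past p, with p on its left: one positive turn.
      if (b[1] > p[1] && isLeft(a, b, p) > 0) wn++;
    } else {
      // Edge crosses downward past p, with p on its right: one negative turn.
      if (b[1] <= p[1] && isLeft(a, b, p) < 0) wn--;
    }
  }
  return wn;
}

// Decide whether the point p is filled under the given rule.
function isFilled(polygon, p, rule = "nonzero") {
  const wn = windingNumber(polygon, p);
  return rule === "evenodd" ? Math.abs(wn) % 2 === 1 : wn !== 0;
}
```

For a five-pointed star traced in one continuous path, the central region has winding number 2. It is therefore filled under the non-zero rule but left empty under the odd rule, while the five points of the star, with winding number 1, are filled under both.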

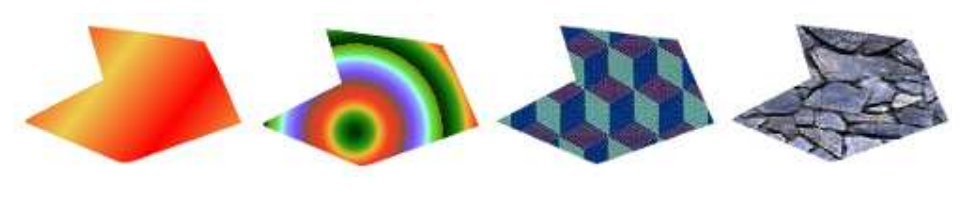

There is still the question of what a shape should be filled with. Of course, it can be filled with a color, but other types of fill are possible, including patterns and gradients.

A pattern is an image, usually a small image. When used to fill a shape, a pattern can be repeated horizontally and vertically as necessary to cover the entire shape.

A gradient is similar in that it is a way for color to vary from point to point, but instead of taking the colors from an image, they are computed. There are a lot of variations to the basic idea, but there is always a line segment along which the color varies. The color is specified at the endpoints of the line segment, and possibly at additional points; between those points, the color is interpolated. The color can also be extrapolated to other points on the line that contains the line segment but lying outside the line segment; this can be done either by repeating the pattern from the line segment or by simply extending the color from the nearest endpoint.

For a linear gradient, the color is constant along lines perpendicular to the basic line segment, so we get lines of solid color going in that direction.
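The following sketch computes the color of a two-color linear gradient at an arbitrary point. The function name `linearGradientColor` and the color format (`[r, g, b]` with components from 0 to 1) are assumptions, the endpoints are assumed to be distinct, and outside the segment the sketch extends the color of the nearest endpoint instead of repeating the gradient.

```javascript
// Color of a linear gradient at point p.
// p0, p1: endpoints of the gradient's line segment, as [x, y]
// c0, c1: colors at p0 and p1, as [r, g, b] with components from 0 to 1
function linearGradientColor(p0, p1, c0, c1, p) {
  const dx = p1[0] - p0[0];
  const dy = p1[1] - p0[1];
  // Project p onto the line through p0 and p1; t is 0 at p0 and 1 at p1.
  // Points on the same perpendicular line get the same t.
  let t = ((p[0] - p0[0]) * dx + (p[1] - p0[1]) * dy) / (dx * dx + dy * dy);
  // Outside the segment, extend the color of the nearest endpoint.
  t = Math.min(1, Math.max(0, t));
  // Interpolate each color component between c0 and c1.
  return c0.map((a, i) => a + t * (c1[i] - a));
}
```

With a horizontal segment from (0,0) to (10,0), red at the start and blue at the end, the points (5,7) and (5,-3) both get the color `[0.5, 0, 0.5]`, which shows the lines of solid color running perpendicular to the segment.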

In a radial gradient, the color is constant along circles centered at one of the endpoints of the line segment.

And that doesn't exhaust the possibilities. To give you an idea of what patterns and gradients can look like, here is a shape, filled with two gradients and two patterns:

The first shape is filled with a simple linear gradient defined by just two colors, while the second shape uses a radial gradient.

Patterns and gradients are not necessarily restricted to filling shapes. Stroking a shape is, after all, the same as filling a band of pixels along the boundary of the shape, and that can be done with a gradient or a pattern, instead of with a solid color.

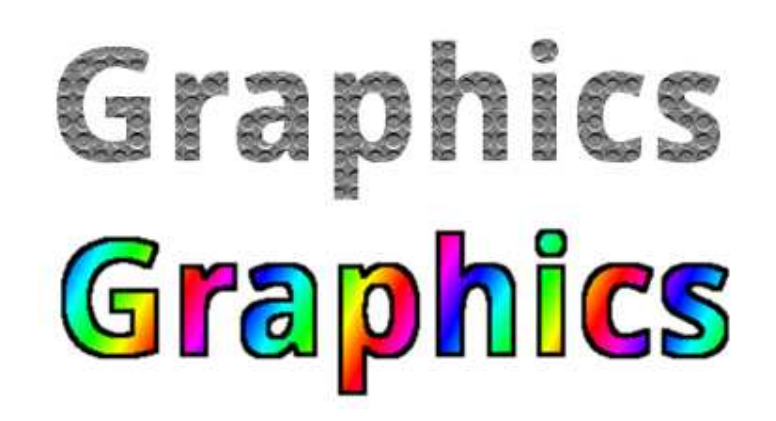

Finally, a string of text can be considered to be a shape for the purpose of drawing it. The boundary of the shape is the outline of the characters. The text is drawn by filling that shape.

In some graphics systems, it is also possible to stroke the outline of the shape that defines the text.

In the following illustration, the string "Graphics" is shown, on top, filled with a pattern and, below that, filled with a gradient and stroked with solid black:

JavaScript is a dynamic programming language that's used for web development, in web applications, for game development, and lots more. It allows us to implement dynamic features on web pages that cannot be done with only HTML and CSS.

Many browsers use JavaScript as a scripting language for doing dynamic things on the web. Any time we see a click-to-show dropdown menu, extra content added to a page, or dynamically changing element colors on a page, to name a few features, we're seeing the effects of JavaScript.